Shutting Out Noise and Understanding Artificial Intelligence

Noise: A Flaw in Human Judgment. By Daniel Kahneman, Olivier Sibony and Cass R. Sunstein. New York: Little, Brown Spark. 2021. Pp. ix, 454. Hardcover, $32; paper, $21.99.

You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place. By Janelle Shane. New York: Voracious. 2019. Pp. 259. Hardcover, $28; paper, $18.99.

Introduction

Artificial intelligence (AI) is everywhere. Explanations of AI, accurate or not, are also everywhere. Some give broad overviews of how the technology works, but these broad overviews often explain little more than that AI involves some amount of pattern matching.1See, e.g., Ryan Calo, Artificial Intelligence Policy: A Primer and Roadmap, 51 U.C. Davis L. Rev. 399, 404–05 (2017).

Misunderstanding how AI works or merely treating it as a black box constrains discussions of what AI can, and can’t, do in the law and can lead to improper conclusions. On the other hand, authors of more detailed explanations of AI might assume that the reader is already familiar with some related technical concepts. Moreover, the media’s tendency to produce sensationalized headlines about AI that are misleading or just plain wrong makes it hard to know what issues are pressing and what is simply science fiction.2Oscar Schwartz, ‘The Discourse Is Unhinged’: How the Media Gets AI Alarmingly Wrong, Guardian (July 25, 2018, 6:00 AM), https://www.theguardian.com/technology/2018/jul/25/ai-artificial-intelligence-social-media-bots-wrong [perma.cc/QB3N-K8KG].

Janelle Shane3AI Humorist & Blogger, Senior Research Scientist, Boulder Nonlinear Systems. For more information on Janelle Shane and her work with AI, see Janelle Shane, AI Weirdness, https://www.aiweirdness.com [perma.cc/35JL-HZA9].

manages to strike a balance between informative, technical, accessible, and entertaining in You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place. She explains “how AI works, how it thinks, and why it’s making the world a weirder place” (Shane, p. 5). In her explanations, Shane breaks down what it looks like to use AI to make predictions by providing examples of not only the input data and output, but also the outputs of intermediary steps to better understand how AI “thinks.” She gives the reader a peek under the hood without relying on technical jargon.

Understanding AI at that level is important because it gives insight into where AI excels and where it fails, what it can improve, and what it might exacerbate. And if we treat AI as an impenetrable black box, we run the risk of seeing it as a silver bullet that can fix anything, a novel system free from existing legal frameworks, or even something too scary to approach.

Daniel Kahneman,4Professor of Psychology and Public Affairs Emeritus, Princeton School of Public and International Affairs; Eugene Higgins Professor of Psychology Emeritus, Princeton University; Fellow, Center for Rationality at the Hebrew University of Jerusalem.

Olivier Sibony,5Professor of Strategy and Business Policy, HEC Paris; Associate Fellow, Saïd Business School, Oxford University.

and Cass R. Sunstein6Robert Walmsley University Professor, Harvard University.

fall into exactly this trap of characterizing AI as a silver bullet in Noise: A Flaw in Human Judgment by focusing on the consistency of automation in offering it as a way to reduce noise, which they argue is everywhere but also “rarely recognized” (Kahneman, Sibony and Sunstein, pp. 5–6). However, they ignore what differentiates AI from traditional programming techniques and miss how AI might amplify some types of noise as a result. While the book has received much praise7E.g., Patrick Barry, Noise Pollution, 19 Legal Commc’n & Rhetoric: JALWD 203 (2022) (reviewing Noise); Ken J. Gilhooly & Derek H. Sleeman, To Differ Is Human: A Reflective Commentary on “Noise. A Flaw in Human Judgment”, by D. Kahneman, O. Sibony & C.R. Sunstein (2021). London: William Collins, 36 Applied Cognitive Psych. 724 (2022); Lyle A. Brenner, Daniel Kahneman, Olivier Sibony, and Cass R. Sunstein. Noise: A Flaw in Human Judgment. New York: Little, Brown Spark, 2021. 464 pp. , Hardcover, 67 Admin. Sci. Q. NP69 (2022); Steven Brill, For a Fairer World, It’s Necessary First to Cut Through the ‘Noise’, N.Y. Times (May 18, 2021), https://www.nytimes.com/2021/05/18/books/review/noise-daniel-kahneman-olivier-sibony-cass-sunstein.html [perma.cc/GR3L-4GAR].

and found itself on the New York Times bestseller list,8Best Sellers: Hardcover Nonfiction, N.Y. Times (June 27, 2021), https://www.nytimes.com/books/best-sellers/2021/06/27/hardcover-nonfiction [perma.cc/YM3T-WMMX].

it has also received criticism for inaccuracies about statistics, half-baked discussions, and omissions of prior scholarship in its effort to bring these statistical concepts to a wider audience.9E.g., Andrew Gelman, Thinking Fast, Slow, and Not at All: System 3 Jumps the Shark, Stat. Modeling, Causal Inference, & Soc. Sci. (May 23, 2021, 5:16 PM), https://statmodeling.stat.columbia.edu/2021/05/23/thinking-fast-slow-and-not-at-all-system-3-jumps-the-shark [perma.cc/TP98-T5BC] (noting W.E. Deming and Fischer Black as examples of statisticians and economists who have published pieces about noise); Caroline Criado Perez, Noise by Daniel Kahneman, Olivier Sibony and Cass Sunstein Review – The Price of Poor Judgment, Guardian (June 3, 2021, 2:30 AM), https://www.theguardian.com/books/2021/jun/03/noise-by-daniel-kahneman-olivier-sibony-and-cass-sunstein-review-the-price-of-poor-judgment [perma.cc/29B9-9VP4].

This Notice uses Shane’s helpful AI explainer to take a closer look at Kahneman, Sibony, and Sunstein’s contention that using AI to make judgments could remove noise from decisionmaking. Armed with Shane’s understanding of AI, the Notice seeks to explain what Kahneman, Sibony, and Sunstein gloss over in their discussions of algorithms and AI,10Criado Perez, supra note 9.

which lack the depth and nuance present in other parts of the book. In doing so, the Notice also presents some lessons for evaluating AI systems—an urgent task as our legal system is increasingly confronted by, and integrated with, artificial intelligence.11See generally, e.g., Anya E.R. Prince & Daniel Schwarcz, Proxy Discrimination in the Age of Artificial Intelligence and Big Data, 105 Iowa L. Rev. 1257, 1259–62 (2020); Bobby Chesney & Danielle Citron, Deep Fakes: A Looming Challenge for Privacy, Democracy, and National Security, 107 Calif. L. Rev. 1753 (2019); W. Nicholson Price II, Problematic Interactions Between AI and Health Privacy, 2021 Utah L. Rev. 925; Margot E. Kaminski, Authorship, Disrupted: AI Authors in Copyright and First Amendment Law, 51 U.C. Davis L. Rev. 589 (2017); Pauline T. Kim & Matthew T. Bodie, Artificial Intelligence and the Challenges of Workplace Discrimination and Privacy, 35 A.B.A. J. Lab. & Emp. L. 289 (2021).

I. Noise

Kahneman, Sibony, and Sunstein argue that statistical bias12Kahneman, Sibony, and Sunstein use “bias” in the statistical sense and not as a synonym for social discrimination. Kahneman, Sibony & Sunstein, p. 66. In this piece, I use “statistical bias” to avoid confusion with social discrimination and other biases.

and noise contribute independently to judgment errors, and therefore, the reduction of noise is (in principle) as important as the reduction of statistical bias.13See Kahneman, Sibony & Sunstein, p. 66.

They focus on what they dub “professional judgments” (Kahneman, Sibony and Sunstein, p. 43), such as hiring decisions, weather forecasts, and insurance quotes. Broadly, judgments can be broken down into different types by the task at hand. Predictive judgments have some sense of a “right” answer while evaluative judgments seek to measure the degree of something (such as the quality of restaurants) or resolve tradeoffs (such as in hiring decisions) (Kahneman, Sibony and Sunstein, p. 51).

A. What Is Noise?

To illustrate statistical bias and noise, Kahneman, Sibony, and Sunstein present a quick game: take a stopwatch with a lap function and, without looking at the stopwatch, produce five consecutive laps of ten seconds each (Kahneman, Sibony and Sunstein, p. 40). Even though the goal is to get five laps of exactly ten seconds, your laps likely vary in duration (Kahneman, Sibony and Sunstein, p. 40). That variability is noise. If all of your laps ran consistently shorter or longer than ten seconds, the difference between ten seconds and the average duration of your laps is statistical bias (Kahneman, Sibony and Sunstein, p. 41).

Kahneman, Sibony, and Sunstein stress that “[a] defining feature of system noise is that it is unwanted” (Kahneman, Sibony and Sunstein, p. 27). Variability itself is not inherently negative—diversity in thought enhances creativity and decisionmaking by encouraging novel information and perspectives.14Katherine W. Phillips, How Diversity Makes Us Smarter, Sci. Am. (Oct. 1, 2014), https://www.scientificamerican.com/article/how-diversity-makes-us-smarter [perma.cc/7CZ2-SLUG].

By defining noise as unwanted from the outset, Kahneman, Sibony, and Sunstein also avoid the question of what determines desirability. This tactic of narrowing scope to be able to make simplistic conclusions later is something they employ in their treatment of AI as well.

Using the scenario of judges determining sentences for criminal defendants, Kahneman, Sibony, and Sunstein define a few types of noise.15While these map to existing statistical concepts, Kahneman, Sibony, and Sunstein sometimes create their own terminology in an effort to avoid technical jargon. See, e.g., Kahneman, Sibony & Sunstein, p. 76.

First is the umbrella concept of system noise, which is where the same case results in different judgments when presented to different individuals (Kahneman, Sibony and Sunstein, p. 78). System noise has two major components—level noise and pattern noise—pattern noise being the bigger contributor to system noise (Kahneman, Sibony and Sunstein, pp. 78, 212). Level noise is caused by the variation between different individuals, such as the difference in a lenient judge’s versus a more “average” one’s tendencies in sentencing (Kahneman, Sibony and Sunstein, p. 74). The variation caused by level noise depends not on the case or defendant, but on the judge (Kahneman, Sibony and Sunstein, p. 74).

The other component, pattern noise, refers to how an individual’s decisions vary by case (Kahneman, Sibony and Sunstein, p. 78). Pattern noise itself can be broken down into two components (Kahneman, Sibony and Sunstein, pp. 203–04). Stable pattern noise, the larger component, encompasses relatively consistent factors that affect a decisionmaker (Kahneman, Sibony and Sunstein, pp. 203, 213). For example, a judge might impose harsher sentences for certain types of crimes.16See Kahneman, Sibony & Sunstein, pp. 75–76.

Occasion noise, the other part of pattern noise, is the randomness caused by whatever might be going on at the particular moment a decision is made, whether it be the decisionmaker’s mood or the weather outside.17See Kahneman, Sibony & Sunstein, pp. 77, 86, 203–04.

Kahneman, Sibony, and Sunstein discuss how occasion noise is difficult to measure because when people make a “carefully considered professional opinion,” they want to believe that it was based on convincing arguments, not random happenstance (Kahneman, Sibony and Sunstein, p. 81). The same, however, could also be said for stable pattern noise. If a subset of judges is particularly harsh towards defendants of color, that would fall under stable pattern noise, but those judges probably wouldn’t want to admit that the defendant’s race plays a role in their “carefully considered” determinations. And a lenient judge that causes level noise may not be quick to give themselves that label either.

Kahneman, Sibony, and Sunstein also walk through various sources of noise. These include scenarios in group decisionmaking, where who speaks first or last has an outsized impact on the final judgment,18Kahneman, Sibony & Sunstein, p. 94. This phenomenon differs from the “wisdom-of-crowds effect,” where independent judgments of many individuals can average out to a strikingly accurate judgment. Kahneman, Sibony & Sunstein, pp. 83, 99; see also Brad DeWees & Julia A. Minson, The Right Way to Use the Wisdom of Crowds, Harv. Bus. Rev. (Dec. 20, 2018), https://hbr.org/2018/12/the-right-way-to-use-the-wisdom-of-crowds [perma.cc/HB6A-35H5].

as well as a variety of sources rooted in psychological biases (Kahneman, Sibony and Sunstein, ch. 13). Judgments that require rating something on a scale or against a set of descriptive phrases also cause noise (Kahneman, Sibony and Sunstein, p. 186). This is not necessarily because the decisionmakers substantively disagree with one another, but instead it might be because they interpret the scale options differently (Kahneman, Sibony and Sunstein, p. 189). For example, research has shown that the ambiguity in legal standards—such as “clear and convincing evidence,” “probable cause,” and “reasonable”—causes difficulties in communication, and that difficulty translates to noisy judgments and jury decisions.19See Kahneman, Sibony & Sunstein, p. 189; District of Columbia v. Wesby, 583 U.S. 48, 64 (2018) (describing how there is no precise definition for “probable cause”); Benjamin C. Zipursky, Reasonableness in and out of Negligence Law, 163 U. Pa. L. Rev. 2131, 2135 (2015). And that’s before getting to less well-known standards, like “reasonably probable.” See, e.g., Young v. Corr. Healthcare Cos., No. 13-cv-315, 2024 WL 866286, at *2 (N.D. Okla. Feb. 29, 2024).

B. Addressing Noise

After walking through what noise is and its prevalence in all kinds of human decisions, Kahneman, Sibony, and Sunstein provide some thoughts on how to reduce this unwanted variation in outcomes and thus how to improve judgments. They start with the identification of noise using a backward-looking approach: “Noise is inherently statistical: it becomes visible only when we think statistically about an ensemble of similar judgments” (Kahneman, Sibony and Sunstein, p. 219). They suggest using noise audits for this identification process (Kahneman, Sibony and Sunstein, p. 221) and provide a guide for approaching the logistics and assembling a team for such a process (Kahneman, Sibony and Sunstein, app. A).

They also argue that the focus should be on pattern noise because they believe that level noise should be “a relatively easy problem to measure and address” (Kahneman, Sibony and Sunstein, p. 216). However, this relative ease is due in part to their initial caveat that noise is only unwanted variation. The line between level noise and beneficial variance doesn’t seem like it would always be clear. Using the sentencing example, between the lenient judges and the “average” ones, why is it the lenient judges that are labeled as noisy?

The question of what makes differences in judgment unwanted also invites the question of where societal bias fits into this world of noise and statistical bias. Kahneman, Sibony, and Sunstein note that statistical bias is not synonymous with our societal use of the word (Kahneman, Sibony and Sunstein, p. 66), but they also conceptualize societal bias as a subset of statistical bias distinct from noise (Kahneman, Sibony and Sunstein, p. 71). And while it makes sense that societal bias on a systemic level would lead to statistical bias, it isn’t entirely clear why some individual biases couldn’t manifest as stable pattern noise. For example, with caste discrimination, which antidiscrimination laws are starting to address,20See, e.g., Alexandra Yoon-Hendricks, Seattle Has Banned Caste Discrimination. Here’s How the New Law Works, Seattle Times (Feb. 26, 2023, 3:26 PM), https://www.seattletimes.com/seattle-news/politics/seattle-has-banned-caste-discrimination-heres-how-the-new-law-works [perma.cc/CYM7-X7MW].

discriminatory conduct is likely to come from only a subset of the population that knows what to look for in determining a person’s caste.21See, e.g., Rough Translation, How to Be an Anti-Casteist, NPR (Sept. 30, 2020, 5:30 PM), https://www.npr.org/transcripts/915299467 [perma.cc/2VFA-63DE] (describing how Indian tech workers experience the caste system in the United States and noting that the code for signaling one’s caste is a “thing that many people in the U.S. just don’t see”).

After identifying noise, the question is then how to reduce it. Kahneman, Sibony, and Sunstein discuss some strategies that are easier said than done, like getting better judges (Kahneman, Sibony and Sunstein, ch. 18) or correcting judgments after they have been made (Kahneman, Sibony and Sunstein, pp. 236–37). When described in the abstract, these “fixes” aren’t particularly compelling as tangible, implementable solutions. In the other direction, Kahneman, Sibony, and Sunstein provide case studies and recommendations for some specific fields, including forensic science, medicine, and hiring (Kahneman, Sibony and Sunstein, chs. 20–25). The lessons from these case studies aren’t necessarily generalizable, but maybe the point is that noise reduction solutions should be specific to the application.

Of interest to this Notice is Kahneman, Sibony, and Sunstein’s exploration of automated decisionmaking, from simple statistical models to AI (Kahneman, Sibony and Sunstein, chs. 9–12). They see mechanical decisionmaking, in which there is a set of rules and no room for any discretion, as free from noise (Kahneman, Sibony and Sunstein, p. 124). They present different types of rules and algorithms, ranging from simple rules (“hire anyone who completed high school”) to AI (Kahneman, Sibony and Sunstein, pp. 113, 124). They acknowledge the potential for discrimination and bias in algorithms, but they also believe that “[i]n principle, we should be able to design an algorithm that does not take account of race or gender” (Kahneman, Sibony and Sunstein, p. 335). As we will see, it’s not clear that is true.

II. Artificial Intelligence

AI is a broad concept that encompasses the combination of human intelligence and machines (computers).22Educ. Ecosystem (LEDU), Clearing the Confusion: AI vs Machine Learning vs Deep Learning Differences, Towards Data Sci. (Sept. 14, 2018), https://towardsdatascience.com/clearing-the-confusion-ai-vs-machine-learning-vs-deep-learning-differences-fce69b21d5eb [perma.cc/2KAJ-ZPNZ].

AI doesn’t include robots reading scripts or humans pretending to be AI.23Shane, p. 8. It may sound obvious that humans don’t count as AI, but technology that claims to be “fully automated” can still be powered by humans. Shane, ch. 9; Olivia Solon, The Rise of ‘Pseudo-AI’: How Tech Firms Quietly Use Humans to Do Bots’ Work, Guardian (July 6, 2018, 3:01 AM), https://www.theguardian.com/technology/2018/jul/06/artificial-intelligence-ai-humans-bots-tech-companies [perma.cc/TRF9-FRLE]; see also Russell Brandom, When AI Needs a Human Assistant, Verge (June 12, 2019, 10:02 AM), https://www.theverge.com/2019/6/12/18661657/amazon-mturk-google-captcha-robot-ai-artificial-intelligence-mechanical-turk-humans [perma.cc/BBH8-VK5U].

In her book, Shane uses “AI” to refer specifically to a type of AI known as machine learning, a subset of AI where computers “learn” by looking at examples.24Shane, pp. 8–9; Educ. Ecosystem (LEDU), supra note 22. In this Notice, I use “AI” as Shane does to refer specifically to machine learning.

There are substantial differences between traditional rules-based programming and machine learning,25And rules-based programming that seems to mimic human intelligence should not be confused with AI. See supra note 23 and accompanying text.

and to categorize machine learning models as noiseless models (as Kahneman, Sibony, and Sunstein do) fails to recognize how machine learning and AI differ from traditional rules-based programming.

A. What Is AI?

Before delving into the specifics of AI, it might help to illustrate traditional rules-based programming.26If you’re already familiar with how computer programming and AI work, you should still read this Section for Shane’s fun examples.

Shane uses an example of writing a program that generates knock-knock jokes. Because this kind of programming relies on the programmer to understand how to build a knock-knock joke, the first step is creating a formula for the underlying structure of the joke:

Knock, knock.

Who’s there?

[Name]

[Name] who?

[Name] [Punchline] (Shane, p. 9)

This formula captures the common parts of a knock-knock joke, based on the programmer’s knowledge of how these jokes work. By doing so, the formula then reduces the task to coming up with names and punchlines (Shane, p. 9). The programmer can then provide lists of possibilities for “[Name]” and for “[Punchline]” for the computer to plug into the formula (Shane, pp. 9–10). This set of rules for creating a knock-knock joke (that is, start with the formula, plug in a name, and plug in a punchline) constitutes an algorithm, a general term for “a step-by-step procedure for solving a problem or accomplishing some end.”27Algorithm, Merriam-Webster, https://www.merriam-webster.com/dictionary/algorithm [perma.cc/FX33-DTFB] (last updated Mar. 7, 2024).

The traditional approach works well when the programmer has some clear rules to work with. And it is “noiseless” in the way that Kahneman, Sibony, and Sunstein see algorithms as a method of noise reduction.28See Kahneman, Sibony & Sunstein, pp. 119–20.

Because the programmer explicitly defines the set of rules that the computer follows, the results are fairly predictable. The knock-knock jokes will be structured according to the formula, and the names and punchlines will come directly from the list provided by the programmer. No matter how many times you ask the computer to generate knock-knock jokes using this algorithm, it will produce the same jokes every time; thus, there is no noise.

AI, on the other hand, excels when “the rules are really complicated or just plain mysterious” (Shane, p. 19). This is also where the practicability of algorithms as a noiseless solution gets murkier. With AI, the programmer does not tell the computer the rules for constructing a knock-knock joke (Shane, p. 11). Instead, the programmer asks the computer to create a model—the derived set of rules for constructing a knock-knock joke.29See Sara Brown, Machine Learning, Explained, MIT Sloan Sch. of Mgmt. (Apr. 21, 2021), https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-explained [perma.cc/E2DY-CEFS].

The computer gets a set of existing knock-knock jokes in what looks to the computer like a list of random letters and punctuation (Shane, p. 11). That list of random letters and punctuation is just a string of data; the computer has no concept of what a letter is.30See Shane, p. 112.

This is the training dataset.31See id. In practice, some of the data is often set aside for testing the AI model later—these are known as validation and test datasets. Id.; Training Set vs Validation Set vs Test Set, Codecademy, https://www.codecademy.com/article/training-set-vs-validation-set-vs-test-set [perma.cc/S2TE-XNDC] (last updated Apr. 11, 2023).

With the training data, the computer attempts to create a joke, compares that to the existing jokes, and then adjusts the model to make a better guess next time (Shane, p. 11). The instructions for how to adjust the model are provided by the programmer via a rules-based program; these instructions make up an AI algorithm that is used to train the model.32See Artificial Intelligence (AI) Algorithms: A Complete Overview, Tableau, https://www.tableau.com/data-insights/ai/algorithms [perma.cc/C39W-3NNK]. This is often what the word algorithm evokes when used today. See Kahneman, Sibony & Sunstein, p. 123.

After one round, Shane’s model produces this knock-knock joke:

k k k k k

kk k kkkok

k kkkk

k

kk

kk k kk

keokk k

k

k (Shane, p. 11–12)

It isn’t even close to a series of words, let alone a coherent joke. The model seems to have simply noticed that the most common characters are “k,” space, and line break. After many more rounds, the attempts start to look better (Shane, pp. 12–14). For example, at some point the model begins to produce the underlying formula but still fails at producing actual words for the name and punchline:

Knock Knock

Who’s There?

Bool

Hane who?

Scheres are then the there (Shane, p. 14–15)

After more rounds of training, some actual jokes emerge, though many of them are “partially plagiarized from jokes in the training dataset” (Shane, p. 16). That “plagiarizing” is a consequence of the learning process; the data drives what the model learns. Eventually in this example, there is one original and intelligible joke:

Knock Knock

Who’s There?

Alec

Alec who?

Alec- Knock Knock jokes. (Shane, p. 17)

Even though the model did finally write its own joke, that doesn’t mean AI can understand knock-knock jokes or English-language puns (Shane, p. 17). It was one joke among many attempts. This throwing-spaghetti-at-the-wall-and-seeing-what-sticks approach is a slow and iterative learning process that requires large quantities of examples.33See Shane, p. 43.

The “magic” of machine learning is the ability to try over and over again at a speed humans could never replicate—with the right setup, a computer can learn “in sped-up time, amassing hundreds of years’ worth of training in just a few hours.”34Shane, p. 44 (describing an AI program designed to play an online multiplayer game that accumulated 180 years of gaming time per day by playing itself in tens of thousands of games simultaneously).

This does not take away from the creative result, but it is important to understand that there is no creative intent behind the new knock-knock joke.

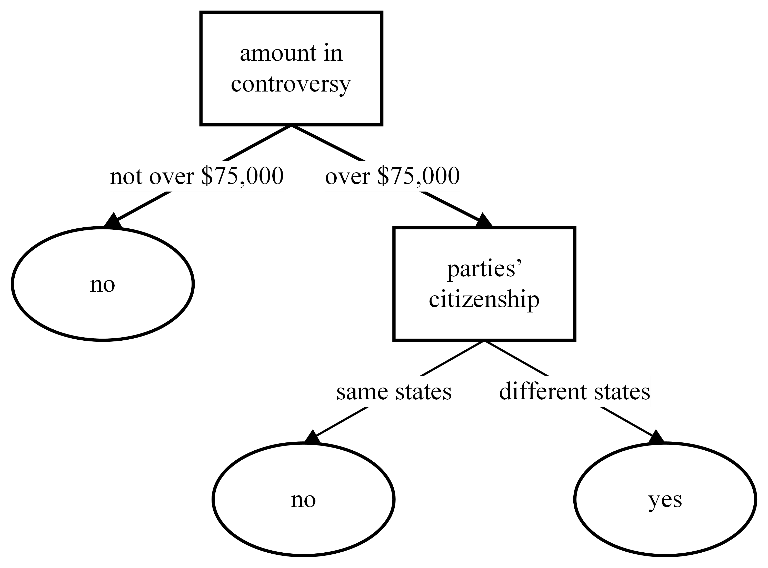

Different AI algorithms have different ways of figuring out rules and storing those rules in models. An example of a relatively simple model is a decision tree, which is basically a flowchart that has no loops.35Shane, p. 88. Decision trees may also be represented as a series of if-then rules. Tom M. Mitchell, Machine Learning 52 (1997).

Different AI algorithms have different ways of figuring out rules and storing those rules in models. An example of a relatively simple model is a decision tree, which is basically a flowchart that has no loops.35Shane, p. 88. Decision trees may also be represented as a series of if-then rules. Tom M. Mitchell, Machine Learning 52 (1997).

To take an example likely found in some law school outlines, a decision tree for determining whether there is diversity jurisdiction under 28 U.S.C. § 1332(a)(1) might look like this:

In this example, the model makes it clear what goes into a decision—it is interpretable. But not all models are like this. Others are “opaque” because they cannot be easily drawn or do not convey concrete steps for how to arrive at a particular outcome.36Jenna Burrell, How the Machine ‘Thinks’: Understanding Opacity in Machine Learning Algorithms, Big Data & Soc’y, Jan.–June 2016, at 1, 1.

These latter types of models have probably contributed to the notion in legal scholarship that machine learning is an unexplainable black box, but machine learning isn’t inherently so.37David Lehr & Paul Ohm, Playing with the Data: What Legal Scholars Should Learn About Machine Learning, 51 U.C. Davis L. Rev. 653, 706 (2017).

AI models can also be reused and repurposed in a process called transfer learning (Shane, p. 47). For example, a model that has already been trained on names of metal bands has already learned some basics of forming words, such as common letter combinations (Shane, p. 45). That model can then be retrained to generate names of other things, such as ice cream flavors (Shane, pp. 45–47). Because the AI model is already partway to the second goal, transfer learning requires less data and time than starting from scratch (Shane, p. 47). This can be helpful when training a model from scratch might be cost prohibitive due to the difficulty of obtaining enough data or the cost of computing resources.38See Qingwei Li, David Ping & Lauren Yu, Fine-Tuning a PyTorch BERT Model and Deploying It with Amazon Elastic Inference on Amazon SageMaker, AWS Mach. Learning Blog (July 15, 2020), https://aws.amazon.com/blogs/machine-learning/fine-tuning-a-pytorch-bert-model-and-deploying-it-with-amazon-elastic-inference-on-amazon-sagemaker [perma.cc/A3WK-3S6M].

Even though the training process is shorter, this shouldn’t be mistaken for some sort of incomplete training. An AI model for generating metal bands that is retrained to generate ice cream flavors quickly moves on from “Dirge of Fudge” and “Silence of Coconut” to “Lemon-Oreo” and “Toasted Basil” (Shane, pp. 45–47).

Once a model has been trained, the next step is to test it to ensure that it is ready for use.39Abid Ali Awan, The Machine Learning Life Cycle Explained, DataCamp (Oct. 2022), https://www.datacamp.com/blog/machine-learning-lifecycle-explained [perma.cc/HV9Y-R3WA]; see also supra note 31.

If the model is to be used widely (that is, not just as a one-time experiment to generate ice cream flavors), then it is deployed to a system such as a website or software application.40Awan, supra note 39.

Whether the AI model will be continually updated with new data or remain as is depends on the setup.41See id.

Additionally, many AI systems in practice consist of a combination of multiple AI models and systems (Shane, p. 106).

Today, a lot of AI is deep learning, which is a subset of machine learning that draws inspiration from the biological neural networks in the human brain.42Educ. Ecosystem (LEDU), supra note 22; see Jeffrey Dean, A Golden Decade of Deep Learning: Computing Systems & Applications, Dædalus, Spring 2022, at 58, 59; Shane, p. 62.

In her explanation of deep learning, Shane names only artificial neural networks, but there are many types of artificial neural networks, as well as other algorithms.43See generally Iqbal H. Sarker, Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions, SN Comput. Sci., Aug. 2021, at 1, 5–14, https://link.springer.com/article/10.1007/s42979-021-00815-1 [perma.cc/LFR7-GNH9].

Deep learning refers to “neural networks with many layers.”44Brown, supra note 29.

Neural networks, although far simpler than the human brain, can require large amounts of computing power in real-world applications.45See Shane, p. 62; Dean, supra note 42, at 58–59.

Originally invented in the 1950s to test theories about how the human brain works, neural networks saw little progress by the 1990s before experiencing a resurgence in the last decade—a resurgence enabled in part by hardware innovations in the last decade and impressive results from deep learning models.46Shane, p. 62; see also Dean, supra note 42, at 58–61.

Neural networks also power large language models (LLMs), which became quite popular with the introduction of ChatGPT in late 2022.47See Timothy B. Lee & Sean Trott, A Jargon-Free Explanation of How AI Large Language Models Work, Ars Technica (July 31, 2023, 7:00 AM), https://arstechnica.com/science/2023/07/a-jargon-free-explanation-of-how-ai-large-language-models-work [perma.cc/W6DN-KY32].

Neural networks consist of simple units commonly known as neurons (Shane, p. 62). Each neuron takes some numbers as inputs, does a calculation of some kind, and then produces a number as an output.48 Mitchell, supra note 35, at 82.

That output may then serve as an input for other neurons.49Id.

To illustrate how a neural network works, Shane uses an example task of creating sandwiches from a provided list of sandwich fillings, explaining how a model can learn to favor ingredients like peanut butter and marshmallow while avoiding eggshells and mud, paired with easy-to-understand images of what a very basic neural network can look like (Shane, pp. 63–75). There can be an element of randomness to the training process,50See Shane, pp. 72–73.

and that can lead to a differently trained model when training from scratch, even with the same training data.51Jason Brownlee, What Does Stochastic Mean in Machine Learning?, Mach. Learning Mastery (July 24, 2020), https://machinelearningmastery.com/stochastic-in-machine-learning, [perma.cc/4QM2-4SSV].

B. Noisy AI?

Understanding the technical details of how AI algorithms learn to accomplish tasks is only part of the equation. While AI can accomplish some amazing and sometimes unbelievable feats, it is also still prone to a host of failures, which “can range from slightly annoying to quite serious” (Shane, p. 109). The reasons why AI can produce unwanted results also show that using AI to reduce noise requires more nuance than what Kahneman, Sibony, and Sunstein contend.

1. Data Issues

Because AI development relies so heavily on data to train the models,52See Shane, p. 44.

the quantity and quality of the training data53For this Section, I use “training data” to refer to any data that is used in the development of an AI model, including test or validation sets created from the initial set of data.

impact the quality of the AI predictions. To start, if there is not enough data, the AI model will be limited in what it can predict. For example, if a dataset of eight ice cream flavors is used to train a model that generates new ice cream flavors, the algorithm may learn how to simply regurgitate the eight flavor names (Shane, pp. 111–14). “Memorizing the input examples isn’t the same as learning how to generate new flavors,” Shane observes, “In other words, this algorithm has failed to generalize” (Shane, p. 114).

The words that comprise the ice cream flavors do not embed any information about what makes an ice cream flavor.54See Shane, p. 111.

Spotting the connection between “chocolate,” “peanut butter chip,” and “mint chocolate chip” is something that the human brain does automatically and effortlessly,55See Daniel Kahneman, Thinking, Fast and Slow 51 (2011).

but that same task requires a computer to do much more. To the computer, a list of ice cream flavors is simply a list of letters and whitespace, as if you are spelling out the list to be transcribed one character at a time: “c, h, o, c, o, l, a, t, e, line break, p, e, a, n, u, t, space, b, u, t, t, e, r,” and so on.56It’s actually a list of numbers, with each number corresponding to a character. See Shane, p. 112.

Like in the knock-knock joke example, the algorithm is learning which letter or whitespace should come next. It is also limited to the characters in the training data; because the letter “f” never appears in the original flavors, the model will never guess words like “toffee” or “fudge” (Shane, p. 112). On the other hand, training with over 2,000 examples produces original flavors like “milky ginger chocolate peppercorn” and “carrot beer” (Shane, p. 114).

This example illustrates how the algorithm is missing data about the world (Shane, p. 134), and it also goes back to how the joke-generating AI model didn’t understand English or humor. Even with the longer list of ice cream flavors, the model did not know it was looking for words that represent ingredients to combine with the cold and creamy sweet treat that is ice cream. In real-world applications, this can lead to ill-fitting predictions. For example, Google’s keyboard predictions at one point suggest “funeral” if the user typed, “I’m going to my Grandma’s” (Shane, p. 136). Similarly, some Facebook users found themselves reminded of unwelcome memories highlighted in their “Year in Review.”57Alex Hern, Facebook Apologises over ‘Cruel’ Year in Review Clips, Guardian (Dec. 29, 2014, 10:11 AM), https://www.theguardian.com/technology/2014/dec/29/facebook-apologises-over-cruel-year-in-review-clips [perma.cc/8KS7-9CCG].

Limited data also invites concern around how well the data represents the real world.58See Shane, p. 128.

In the ice cream example, a model might learn that every flavor needs to contain “chocolate” or “chip” in the name. This AI version of availability bias, relying too heavily on what is available,59A. Banerjee & D. Nunan, Availability Bias, Catalogue of Bias (2019), https://catalogofbias.org/biases/availability-bias [perma.cc/86FL-AZLH].

can lead to a problem known as overfitting.60See Shane, pp. 114, 169.

Instead of generalizing based on the training data, an AI model can learn to mimic the data too well—down to whatever minor quirks we might consider noise.61See Shane, p. 114.

Because AI doesn’t understand any meaning behind the training data and blindly identifies patterns, it cannot on its own determine what is unwanted variance in the data and may very well learn the patterns of that noise.

In blindly looking for patterns, AI can also be prone to chasing red herrings (Shane, p. 120). For example, Shane trained a neural network on Buzzfeed articles to generate new listicle titles in English, and it produced some headlines that appeared to use a mix of foreign languages, like “29 choses qui aphole donnar desdade” (Shane, pp. 123–24). It turned out that half of the headlines in the dataset were in a language other than English, and the neural net did not know multiple languages existed or that English was the target language (Shane, p. 124). Limiting the training data to only English examples meant that the neural net could learn one language well instead of several languages poorly (Shane, pp. 124–25). This “[z]eroing in on extraneous information” is also a common failure in AI applications for generating and recognizing images (Shane, p. 125).

Although it was easy in this example to simply remove the foreign language headlines from the training data, it’s not always that straightforward. As Kahneman, Sibony, and Sunstein detail, humans rating things on scales can produce noisy data when people interpret possible answers on a survey differently (Kahneman, Sibony and Sunstein, ch. 15). This can come into play with human-annotated datasets, like a dataset used for analyzing the emotions behind text.62Marc Herrmann, Martin Obaidi, Larissa Chazette & Jil Klünder, On the Subjectivity of Emotions in Software Projects: How Reliable Are Pre-Labeled Data Sets for Sentiment Analysis?, J. Sys. & Software, Nov. 2022, at 1, 1, https://www.sciencedirect.com/science/article/pii/S0164121222001431 [perma.cc/EH2E-JY2Q].

Data also mirrors a reality that is the product of biases—and that should not be encoded into AI decisionmaking. A ProPublica investigation found that numerous jurisdictions had adopted a risk assessment tool that identified Black defendants as high risk much more often than it did for white defendants because it learned from data with that bias.63Shane, pp. 177–78; see Julia Angwin, Jeff Larson, Surya Mattu & Lauren Kirchner, Machine Bias, ProPublica (May 23, 2016), https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing [perma.cc/G392-3AK6].

While sometimes the bias can be edited out during the training process, it’s not necessarily clear whether the bias was eliminated or merely hidden, nor is it always possible to detect and edit out the bias (Shane, pp. 176–77). Even when trained on data that might appear unbiased, an AI model might result in unintended proxy discrimination.64Prince & Schwarcz, supra note 11.

As Shane observes, “The common thread seems to be that if data comes from humans, it will likely have bias in it” (Shane, p. 174). One issue in viewing AI as a noiseless solution is that training data will likely have bias and noise, and AI models learn from the imperfect data.

2. Learning Issues

AI can also fail when the model has successfully learned how to do what you asked of it, but what you asked isn’t what you were actually hoping to accomplish (Shane, p. 141). AI is prone to solving the wrong problem because it develops its solutions by taking the path of least resistance without knowledge of what humans would have preferred: “It’s really tricky to come up with a goal that the AI isn’t going to accidentally misinterpret” (Shane, p. 142).

Some of these issues can be seen in the ways AI responds to a reward function, which tells the algorithm what problem it should solve (Shane, p. 155). The reward function can be modified to incentivize or disincentivize specific behavior, but the computer still cannot understand the programmer’s intent (Shane, pp. 143, 145). For example, you might train a model to keep a game of Tetris going as long as possible, but its solution is to pause the game indefinitely.65See Shane, pp. 147–48; Tom Murphy VII, The First Level of Super Mario Bros. Is Easy with Lexicographic Orderings and Time Travel . . . After That It Gets a Little Tricky., 2013 SIGBOVIK 112, 130–31.

The computer wasn’t trying to cheat; it just played Tetris like a “very literal-minded toddler[]” given the general instruction of “win the game” (Shane, pp. 147–48).

Another example is algorithmic recommendations, such as those found on YouTube or Facebook.66Shane, pp. 158–60; Sheera Frenkel & Cecilia Kang, An Ugly Truth: Inside Facebook’s Battle for Domination 286 (2021).

Rewarding longer viewer times or more engagement without any regard for the kind of content that gets promoted can draw people into “addictive conspiracy-theory vortexes,” strengthen social biases by suggesting hate groups that engage in real-world violence,67Shane, p. 159; Frenkel & Kang, supra note 66, at 85, 182–85; Will Carless & Michael Corey, To Protect and Slur: Inside Hate Groups on Facebook, Police Officers Trade Racist Memes, Conspiracy Theories and Islamophobia, Reveal (June 14, 2019), https://revealnews.org/article/inside-hate-groups-on-facebook-police-officers-trade-racist-memes-conspiracy-theories-and-islamophobia [perma.cc/N2PM-3MRP].

and contribute to the spread of misinformation.68E.g., Jack Stubbs, Facebook Says Russian Influence Campaign Targeted Left-Wing Voters in U.S., UK, Reuters (Sept. 15, 2020, 4:49 PM), https://www.reuters.com/article/usa-election-facebook-russia/facebook-says-russian-influence-campaign-targeted-left-wing-voters-in-u-s-uk-idUSKBN25S5UC [perma.cc/K7EC-V85G]; Frenkel & Kang, supra note 66, at 169–73, 177–82; Justyna Obiała et al., COVID-19 Misinformation: Accuracy of Articles About Coronavirus Prevention Mostly Shared on Social Media, 10 Health Pol’y & Tech. 182, 183 (2021); Kari Paul, ‘It Let White Supremacists Organize’: The Toxic Legacy of Facebook’s Groups, Guardian (Feb. 4, 2021, 6:00 AM), https://www.theguardian.com/technology/2021/feb/04/facebook-groups-misinformation [perma.cc/C5GM-UA6U].

Although there’s no doubt the use of AI has exacerbated these issues, the problem is not purely a technical one; the corporations and organizations behind the AI models make decisions about what kind of reward functions they want.69See Shane, pp. 159–160 (“Without some form of moral oversight, corporations can sometimes act like AIs with faulty reward functions.”); Frenkel & Kang, supra note 66, at 99–101 (describing how Russian hackers “us[ed] Facebook exactly as it was intended” when connecting with people to promote conspiracies about 2016 presidential candidate Hillary Clinton and how “salacious details” leaked from hacked emails created “clickbait built for Facebook”).

The type of content moderation needed to ensure that AI doesn’t make “bad” recommendations requires skills beyond an AI model’s capabilities, like the ability to understand satire or current events that the training data predates.70See Shane, pp. 25–26.

And if these tasks are too hard for humans to define as “correct” and “incorrect,” that is a sign that AI will have a difficult time solving the actual problem.71See Shane, pp. 213–14.

Take the example with lenient and “average” judges: which judges’ sentences should the AI model treat as “ideal”?

By defining noise as unwanted from the outset, Kahneman, Sibony, and Sunstein fail to appreciate the difficulty of defining a goal for an AI task. Focusing on only unwanted variance makes the consistency of computer decisionmaking tempting, as any reduction in variance looks good from this angle. However, teaching an AI model to distinguish between desirable and undesirable variance and to learn the “right” thing is far from trivial. If using AI to determine criminal sentences, we want some variance in the AI-produced sentences—at a minimum, we would want the sentences to vary by crime. But there is also other variance we certainly do not want—sentences should not fluctuate based on the race of the defendants, for example. In between those easy cases of good and bad variance is a range of harder, value-laden decisions about the “right” kinds of variance.

Understanding what a reward function incentivizes helps with understanding that AI models make predictions, not recommendations: “They’re not telling us what the best decision would be—they’re just learning to predict human behavior” (Shane, p. 180). For example, with the risk assessment tool described earlier,72See supra note 63 and accompanying text.

the company behind the tool stated that predicted risk levels had the same accuracy for Black and white defendants (Shane, p. 177). Although the model accurately predicted a racially biased arrest rate, the algorithm should have been trained to ask “Who is most likely to commit a crime?” rather than “Who is likely to be arrested?” (Shane, pp. 177–78). This disconnect can also be seen as the computer performing a different kind of judgment. For example, choosing job candidates involves resolving tradeoffs between the pros and cons of the two candidates in an evaluative judgment (Kahneman, Sibony and Sunstein, p. 51). For an AI model used in hiring decisions, the task is predicting which person the human would be more likely to hire—a predictive judgment. And because the AI model is predicting human behavior, the improved consistency brought by AI can cause amplification of human tendencies.73See, e.g., Ana Bracic, Shawneequa L. Callier & W. Nicholson Price II, Exclusion Cycles: Reinforcing Disparities in Medicine, 377 Science 1158, 1159 (2022).

Even if the reward function has been defined properly, AI might still find unwanted shortcuts. This is often a problem when building simulations for training AI. Creating a simulation necessitates taking shortcuts because it is impossible to recreate every last detail.74See Shane, p. 162; Kyle Wiggers, Training Autonomous Vehicles Requires More than Simulation, VentureBeat (Feb. 17, 2022, 6:30 AM), https://venturebeat.com/ai/training-autonomous-vehicles-requires-more-than-simulation [perma.cc/JQ6B-7YXD].

If something is not technically forbidden, then it’s fair game for the computer, like pausing a game of Tetris in order to not lose.75See supra note 65 and accompanying text.

Moreover, this can have a similar effect as a lack of data, such as self-driving cars that are easily tricked by stickers because the training simulation had no stickers.76Wiggers, supra note 74.

Here the results might be consistent, but they are also unexpected.

There are at least a couple ways to look at the outcomes of AI learning noisy patterns of judgment. One on hand, AI models that have learned to follow the noisy contours of the underlying training data could be seen as making noisy judgments themselves. This is especially true when overfitting is at play, as a failure to generalize likely accompanies mimicking unwanted variation. On the other hand, one might still consider those models noiseless because they are consistent in producing whatever variation the AI models produce. In that case, the models could be seen as reducing noise at the cost of increasing statistical bias, which contradicts Kahneman, Sibony, and Sunstein’s assumption that statistical bias and noise are independent contributors to error—an assumption that undergirds their overall argument that noise reduction needs to be a priority (Kahneman, Sibony and Sunstein, pp. 64–66).

Although there is a myriad of ways that AI can go wrong, not everything with AI is inherently bad. It can be a powerful tool. AI does offer consistency and the possibility of ignoring, say, sources of occasion noise that are completely irrelevant to the judgment.77See Kahneman, Sibony & Sunstein, p. 133. This comes with the caveat that the occasion noise probably has to be entirely irrelevant to the patterns among the judgments, or else an AI model might learn the pattern through proxies anyway. See supra note 64 and accompanying text.

For example, an AI model designed to predict judgments likely would not have been trained on data that included information about the weather, a source of occasion noise.78See Kahneman, Sibony & Sunstein, pp. 89–90.

And after the model has been deployed, new cases fed to the model for predicted judgments likely would not contain weather information either. And out-of-the-box solutions from AI can be fun. One team of researchers used AI to control two-wheeled robots to see if the model could learn a standard robotics solution for seeking a light source and driving toward it (Shane, p. 152). The AI solution? Spinning in circles toward the light source, which turned out, in many ways, to be a better solution than the textbook one (Shane, p. 152). “Machine learning researchers live for moments like this,” writes Shane, “when the algorithm comes up with a solution that’s both unusual and effective” (Shane, p. 152).

III. Evaluating AI Solutions

One important reason for understanding AI at a more technical level, rather than treating it as a black box, is that we can have more nuanced discussions about how the law should evaluate AI solutions. And when flawed AI systems are already making impactful decisions, such as loan approvals, resume screening, parole determinations, and content consumption (Shane, pp. 3–4), we need to scrutinize how these programs work and why they may not produce the outcomes we aim for. Using Shane’s explanations of AI to break down why Kahneman, Sibony, and Sunstein’s simplistic “AI solves noise” argument doesn’t work can teach us some lessons for how to analyze other AI solutions.

Peeking under the hood shows that AI is far from being free of human influence. Sometimes, and more commonly than you might think, AI systems turn out to actually be humans behind the curtain.79See Shane, p. 210 (citing a 2019 study finding that 40 percent of AI startups in Europe didn’t use any AI); Solon, supra note 23.

For example, a business expense management application claimed to automatically scan receipts, but it actually used humans to transcribe at least some of those receipts.80Solon, supra note 23.

But even aside from these human-powered “AI” systems, the various quirks and failures of AI show that the learning process of AI learns from, well, humans. The human involvement in the development of AI systems is what Kahneman, Sibony, and Sunstein fail to recognize. While the process of the AI model updating its internal flowchart or guessing what letter comes next happens automatically, there is human involvement in many other facets, including the creation of datasets, experimentation in creating the best model, assessment of the model’s efficacy, design for the deployment of an AI system, and deployment into production.81See Awan, supra note 39.

Moreover, there is human involvement when people use trained AI models, such as when creating prompts and curating results.82See, e.g., Jeff Guo & Kenny Malone, AI Podcast 1.0: Rise of The Machines, Planet Money, at 12:52–13:45 (May 26, 2023, 5:42 PM), https://www.npr.org/transcripts/1178290105 [perma.cc/E4S7-8CM9] (finding the need to use incremental prompts with ChatGPT to build the pieces for an episode script); Eric Griffith, Weird New Job Alert: What Is an AI Prompt Engineer?, PCMag (May 22, 2023), https://www.pcmag.com/how-to/what-is-an-ai-prompt-engineer [perma.cc/38RJ-JVCC] (describing the emerging field of “prompt engineering,” the practice of crafting queries to yield better results with AI); see also supra Section II.A (describing how Shane built an AI model to generate knock-knock jokes but needed to identify which joke was both new and funny).

Human involvement means opportunities for humans to make noisy judgments. Human involvement also means some level of human control, which could be a site of regulation and litigation. AI regulation is taking shape around how data and AI can be misused,83See generally François Candelon, Rodolphe Charme di Carlo, Midas De Bondt & Theodoros Evgeniou, AI Regulation Is Coming: How to Prepare for the Inevitable, Harv. Bus. Rev., Sept.–Oct. 2021, at 102, https://hbr.org/2021/09/ai-regulation-is-coming [perma.cc/933N-EM79]; Alex Engler, The EU and U.S. Are Starting to Align on AI Regulation, Brookings (Feb. 1, 2022), https://www.brookings.edu/blog/techtank/2022/02/01/the-eu-and-u-s-are-starting-to-align-on-ai-regulation [perma.cc/Z66S-3Q8R]; Off. of Sci. & Tech., Blueprint for an AI Bill of Rights, White House, https://www.whitehouse.gov/ostp/ai-bill-of-rights [perma.cc/LN2W-N3XF].

and perhaps more can be done around the development of datasets and the testing of AI models before they are deployed.84See Anupam Chander, The Racist Algorithm?, 115 Mich. L. Rev. 1023, 1039 (2017) (reviewing Frank Pasquale, The Black Box Society: The Secret Algorithms That Control Money and Information (2015)).

For example, the choice to use AI in the first place is another human decision in the development process: was it appropriate to make that choice to adopt AI? Going back to the example of using AI for promoting and moderating content, we can see that AI doesn’t absolve people of the responsibility of figuring out what the desired result should be. Facebook’s own moderation efforts, which started out with an American-centric commitment to freedom of expression, face the impossibility of developing a set of rules that reflect the social norms and values of everyone in the world,85Kate Klonick, The Facebook Oversight Board: Creating an Independent Institution to Adjudicate Online Free Expression, 129 Yale L.J. 2418, 2436–37, 2474 (2020).

and AI isn’t a magic solution for such a problem.

Analyzing the training data of AI models can be one part of evaluating an AI system. If the training data was created from empirical data of human judgments, was anything done to try to remove noise or potential red herrings before using the data as a training set? Furthermore, while AI can lead to unpredictable results, there are also foreseeable consequences to choices like what data to include (or not include) in a dataset—it’s not hard to imagine situations that generate unrepresentative data, which in turn could be a source of noise or other issues. For example, if sentencing data from only the harshest judges was used in a training dataset, the AI model would amplify the level noise produced by those judges.

Unrepresentative data can also encode the stable pattern noise and statistical bias that correlates with social bias and discrimination. In medicine, if data from marginalized populations is less available, then an AI system may make inaccurate recommendations for members of those populations.86Sara Gerke, Timo Minssen & Glenn Cohen, Ethical and Legal Challenges of Artificial Intelligence-Driven Healthcare, in Artificial Intelligence in Healthcare 295, 304 (Adam Bohr & Kaveh Memarzadeh eds., 2020); Bracic et al., supra note 73, at 1159.

In law enforcement, historical crime data reflects racist policing practices, and predictive policing algorithms based on such data might choose neighborhoods of color as likely sites of crime, leading to a misallocation of police patrols.87Will Douglas Heaven, Predictive Policing Is Still Racist—Whatever Data It Uses, MIT Tech. Rev. (Feb. 5, 2021), https://www.technologyreview.com/2021/02/05/1017560/predictive-policing-racist-algorithmic-bias-data-crime-predpol [perma.cc/3FVX-ZKDQ]; Chander, supra note 84, at 1025 n.13.

Already, there are efforts from machine learning researchers to be more mindful of potential dangers when releasing new datasets.88See Timnit Gebru et al., Datasheets for Datasets, Commc’ns ACM, Dec. 2021, at 86, https://arxiv.org/pdf/1803.09010.pdf [perma.cc/4XUZ-9LQM]. For an example of this datasheet in use, see Zejiang Shen et al., Multi-LexSum: Real-World Summaries of Civil Rights Lawsuits at Multiple Granularities, 35 Advances Neural Info. Processing Sys. 13158, app. F (2022).

However, businesses that hide their proprietary AI systems as trade secrets to maintain a competitive advantage89See Burrell, supra note 36, at 3–4.

aren’t likely to be proactively forthcoming in the same way. This secrecy also affects transfer learning, as there may not be public access to the original model’s training data.90See Lauren Leffer, Your Personal Information Is Probably Being Used to Train Generative AI Models, Sci. Am. (Oct. 19, 2023), https://www.scientificamerican.com/article/your-personal-information-is-probably-being-used-to-train-generative-ai-models [perma.cc/F7Y7-SENQ]. For example, OpenAI withheld training information about its GPT-4 model, which is available for engineers to tailor to their own uses. See OpenAI, GPT-4 Technical Report (2023), https://arxiv.org/pdf/2303.08774.pdf [perma.cc/6WRA-9XX2]; Models, OpenAI, https://platform.openai.com/docs/models/overview [perma.cc/6P76-TYAN].

The need for large quantities of data in the AI training process91Shane, p. 115. This data hungriness is exacerbated by the rise of neural networks, which perform better than traditional machine learning algorithms on huge quantities of data. See Md Zahangir Alom et al., A State-of-the-Art Survey on Deep Learning Theory and Architectures, Elecs., Mar. 2019, at 1, 7 fig.7, https://www.mdpi.com/422504 [perma.cc/YJQ2-5358].

also raises nontechnical concerns, especially around what entities are collecting data. The nontrivial task of producing useful datasets for use with AI has contributed to the rise of “big data” and the data economy, or the “production, distribution and consumption of digital data.”92 UN Dep’t of Econ. & Soc. Affs., Econ. Analysis & Pol’y Div., Data Economy: Radical Transformation or Dystopia?, Frontier Tech. Q., Jan. 2019, at 1, https://www.un.org/development/desa/dpad/wp-content/uploads/sites/45/publication/FTQ_1_Jan_2019.pdf [perma.cc/B2QS-6ZMB]; see also Shane, p. 44.

Data collection has become ubiquitous—so much so that every second, enough data is generated to fill one billion 200-page books.93 UN Dep’t of Econ. & Soc. Affs., supra note 92, at 2.

This raises concerns around data privacy, as consumers find it increasingly difficult to control how their data is used.94Hanbyul Choi, Jonghwa Park & Yoonhyuk Jung, The Role of Privacy Fatigue in Online Privacy Behavior, 81 Computs. Hum. Behav. 42, 44 (2018); see also Salomé Viljoen, A Relational Theory of Data Governance, 131 Yale L.J. 573, 577 (2021). One avenue developers have taken is called federated learning, which allows user data to be processed on, for example, individual smartphones rather than all together on the same computer. See, e.g., Brendan McMahan & Daniel Ramage, Federated Learning: Collaborative Machine Learning Without Centralized Training Data, Google Rsch.: Blog (Apr. 6, 2017), https://ai.googleblog.com/2017/04/federated-learning-collaborative.html [perma.cc/2XF3-YE9Z].

The power in data also raises antitrust issues around anticompetitive behavior enabled by massive data collection.95See Shane, p. 115; Lina M. Khan, Sources of Tech Platform Power, 2 Geo. L. Tech. Rev. 325, 330–31 (2018).

Techniques like transfer learning may mitigate the issue of having small training datasets, but that doesn’t take away from the value of big data.

Because the data may be shrouded in secrecy, and because it’s often not predictable what an AI model will learn from the training data, another area of human involvement in AI development worth scrutinizing is in the testing of AI systems. When testing AI models, people decide what data to use for testing, what goes into the testing environment, what metrics to use for evaluating the AI system, and what threshold makes a system good enough for deploying into the real world, among other things. For example, someone might evaluate an AI system that makes hiring decisions for overall accuracy or also for performance along racial and gender lines.96See, e.g., Jeffrey Dastin, Amazon Scraps Secret AI Recruiting Tool That Showed Bias Against Women, Reuters (Oct. 10, 2018, 7:04 PM), https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G [perma.cc/VCE7-MVNV].

These choices might signal a lack of care or perhaps even intent by the programmers, and such choices shouldn’t be shielded from scrutiny just because AI has been thrown into the mix.

Moreover, for AI services that organizations and individuals might pay to use, these kinds of tests can also be performed by outside researchers. Utilizing outside researchers provides another approach to scrutinize AI systems without needing access to the underlying data or knowledge about the behind-the-scenes AI development processes.97E.g., Joy Buolamwini & Timnit Gebru, Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification, 81 Proc. Mach. Learning Rsch. 77 (2018); Patrick Grother, Mei Ngan & Kayee Hanaoka, Nat’l Inst. of Standards & Tech., NISTIR 8280, Face Recognition Vendor Test (FRVT) Part 3: Demographic Effects (2019).

For example, in their widely cited Gender Shades paper, Joy Buolamwini and Timnit Gebru tested three commercial models from the likes of Microsoft and IBM that were designed to classify faces by gender and demonstrated how the models performed worse on women and faces with darker skin tones.98Buolamwini & Gebru, supra note 97, at 77, 85, 88; Gender Shades 5th Anniversary, Algorithmic Just. League, https://gs.ajl.org [perma.cc/JM82-V8MY]. Deborah Raji joined Dr. Buolamwini in subsequent research that showed similarly disparate results with Amazon’s software. Gender Shades 5th Anniversary, supra.

Even though software vendors tend to conceal their data, algorithms, and development processes as trade secrets, outside parties can still glean important information by running their own tests. Indeed, Gender Shades drove Microsoft and IBM to pledge to testing their algorithms more thoroughly and diversifying their training datasets.99Abeba Birhane, The Unseen Black Faces of AI Algorithms, 610 Nature 451, 452 (2022).

This research also influenced regulation of algorithms that analyze faces, such as the numerous jurisdictions that have banned the use of facial recognition technology in part because of misidentifications that disproportionately affect communities of color.100Id.; see also Map, Ban Facial Recognition, https://www.banfacialrecognition.com/map [perma.cc/QY54-DV2Z].

Identifying human influence in an AI system is useful both for identifying human behavior that is more familiar to our existing legal frameworks and, combined with an understanding of how AI learns, for figuring out how the law might handle an AI system.

An important facet of analyzing what an AI system is doing and what it can do is understanding reward functions and what behavior is incentivized. If the reward function incentivizes mimicking the results of past human judgments, then the AI models will embed human behavior—both desirable and undesirable—in their internal flowcharts. What thinking about this in the context of noise shows us is that AI can be consistent in amplifying noise or increasing statistical bias, though perhaps this consistency can turn noisy human judgments into a more cognizable pattern or practice because individual discretion has been removed.101Cf., e.g., Wal-Mart Stores, Inc. v. Dukes, 564 U.S. 338 (2011) (failing to find commonality in an employment discrimination class action in part because the plaintiffs could not statistically demonstrate a “common direction” to managers’ exercise of discretion in pay and promotion decisions).

Understanding what AI is and isn’t capable of is also important for avoiding a common pitfall known as automation bias, or the tendency to treat automated decisions as inherently fair or better.102Danielle Keats Citron, Technological Due Process, 85 Wash. U. L. Rev. 1249, 1271–72 (2008). Shane also refers to this as “mathwashing” or “bias laundering.” Shane, p. 181.

Kahneman, Sibony, and Sunstein fall into this trap by focusing on the consistency of automated decisionmaking while ignoring what makes AI unique. And to be fair, automation bias can be tempting because AI predictions may be presented as evaluations, instead of the probabilistic predictions that they are. For example, LLMs confidently assert that they are all-knowing, even as they regularly provide inaccurate answers.103See generally, Maggie Harrison, ChatGPT Is Just an Automated Mansplaining Machine, Futurism (Feb. 8, 2023, 10:40 AM), https://futurism.com/artificial-intelligence-automated-mansplaining-machine [perma.cc/ZF7C-W7X9]; James Romoser, No, Ruth Bader Ginsburg Did Not Dissent in Obergefell — And Other Things ChatGPT Gets Wrong About the Supreme Court, SCOTUSblog (Jan. 26, 2023, 10:57 AM), https://www.scotusblog.com/2023/01/no-ruth-bader-ginsburg-did-not-dissent-in-obergefell-and-other-things-chatgpt-gets-wrong-about-the-supreme-court [perma.cc/C8ZJ-HDSZ].

This ability to generate results that look and sound reliable regardless of accuracy makes it all the more important that AI is used responsibly. Putting too much trust in deceptively attractive AI systems can have real and harmful results.104See, e.g., Vishwam Sankaran, AI Chatbot Taken Down After It Gives ‘Harmful Advice’ to Those with Eating Disorders, Independent (June 1, 2023, 11:18 AM), https://www.independent.co.uk/tech/ai-eating-disorder-harmful-advice-b2349499.html [perma.cc/DPR3-HPVM] (reporting on a nonprofit for eating disorder recovery whose AI chatbot gave harmful advice); Kashmir Hill, Wrongfully Accused by an Algorithm, N.Y. Times (Aug. 3, 2020), https://www.nytimes.com/2020/06/24/technology/facial-recognition-arrest.html [perma.cc/79XZ-JJ84] (reporting on an innocent Black man who was arrested because the police didn’t properly corroborate a facial recognition search result).

Conclusion

Shane shows that understanding how AI works doesn’t have to require a deep understanding of the technical inner workings of computers. And understanding how AI works is what leads to a more informed understanding of what it can accomplish, as well as its limits—something that would have served Kahneman, Sibony, and Sunstein well as they considered the idea of AI as a way to easily reduce noise. Although AI does result in a model that makes predictions without human intervention, the process of developing and testing an AI model includes a lot of human decisions that have consequences on the performance of the AI model. AI can be an incredible tool when used well, but to use it well requires anticipating and addressing the potential points of failure.

* J.D., May 2023, University of Michigan Law School. I am grateful to Book Review Editors Elena Meth and Gabe Chess for this opportunity and for all of their thoughtful feedback during the editing process. Thank you to Professor Nicholson Price and Melanie Subbiah for the thoughtful guidance and insight in giving shape to this piece. And of course, thanks to the Michigan Law Review editors that carried this piece through the production process—in particular, thank you to Derek A. Zeigler, Ross H. Pollack, Dean Farmer, Elana Herbst, and Peter G. VanDyken from the Executive Editors Office. This Notice is dedicated to Professor Andrea Danyluk.