Hassle

Jane Bambauer*

Before police perform a search or seizure, they typically must meet the probable cause or reasonable suspicion standard. Moreover, even if they meet the appropriate standard, their evidence must be individualized to the suspect and cannot rely on purely probabilistic inferences. Scholars and courts have long defended the distinction between individualized and purely probabilistic evidence, but existing theories of individualization fail to articulate principles that are descriptively accurate or normatively desirable. They overlook the only benefit that the individualization requirement can offer: reducing hassle.

Hassle measures the chance that an innocent person will experience a search or seizure. Because some investigation methods meet the relevant suspicion standards but nevertheless impose too many stops and searches on the innocent, courts must have a lever independent from the suspicion standard to constrain the scope of criminal investigations. The individualization requirement has unwittingly performed this function, but not in an optimal way.

Individualization has kept hassle low by entrenching old methods of investigation. Because courts designate practices as individualized when they are costly (for example, gumshoe methods) or lucky (for example, tips), the requirement has confined law enforcement to practices that cannot scale. New investigation methods such as facial-recognition software and pattern-based data mining, by contrast, can scale up law-enforcement activities very quickly. Although these innovations have the potential to increase the accuracy of stops and searches, they may also increase the total number of innocent individuals searched because of the innovations’ speed and cost-effectiveness. By reforming individualization to minimize hassle, courts can enable law-enforcement innovations that are fairer and more accurate than traditional police investigations without increasing burdens on the innocent.

Introduction

A police officer has submitted an application to a magistrate judge requesting warrants to search every dorm room in the Harvard College residence halls for illegal drugs. To establish probable cause, the officer furnished a copy of a recently published study about student life at Harvard. The study is methodologically sound and concludes that 60% of the on-campus dorm rooms contain illicit drugs.

Naturally, the magistrate judge will deny the application, but why? While probable cause is not defined as a precise probability, we are told that the standard sits below “more likely than not.”[1] A 60% likelihood of finding drugs in any given dorm room should easily clear the bar.

The textbook response to this Harvard dorm hypothetical, a variant of a puzzle posed by Orin Kerr,[2] is that the suspicion stemming from a statistical study is not individualized. The evidence is not tailored to each Harvard student whose home is to be searched. The study offers only probabilistic evidence. No matter how great the chances of finding drugs may be, lack of individualization presents a disqualifying flaw.[3]

Individualized suspicion therefore consists of two distinct prongs. The police must have suspicion—a fairly good chance of finding evidence of a crime—and they also must have individualization. This Article explores the second prong, the individualization requirement, in search of a principled distinction between particularized evidence and evidence that is probabilistically sufficient but constitutionally flawed.

The predominant justification for the individualization requirement appeals to a rejection of cold calculations and group-based generalizations, but this appeal falls flat with a little scrutiny. Police and judges must always resort to rough estimates of the conditional probability that a suspect has engaged in crime. Whether police use a collection of details (a partially corroborated tip and unusual travel habits) or just one important detail (a weaving car or a tattoo matching a victim’s description), they ultimately must ask whether the innocent explanations for the observations are much more probable than the illicit ones.[4] Indeed, even vocal opponents of actuarial policing have acknowledged that all suspicion is built from probabilistic inferences.[5]

Moreover, the distinctions that we intuitively draw between individualized and merely mathematical suspicion get the public policy backwards. Because the investigation methods approved by courts usually rely on the observations and perceptions of police, the “particularized” evidence is likely to be biased, error prone, and disproportionately aimed at poor and minority residents living in higher-crime areas.[6] Subjective factors like a suspect’s “nervousness” or “furtive movements” can be imagined or, worse still, manufactured through deceit.[7] And the long, detailed narratives that courts have come to expect from police in order to satisfy particularization requirements are so inconsistent that they risk diluting the suspicion requirement.[8] Individualization seems to be intellectually bankrupt and morally hazardous.

Nevertheless, our fumbling with the notion of individualization performs latent but valuable work. Given that all evidence is probabilistic, the virtue of individualization has little to do with the nature of probabilistic calculations. It doesn’t even have much to do with the particular suspect. Instead, individualization protects everybody else from the potential costs of law-enforcement investigations. The techniques courts and scholars accept as individualized exclude most of the population from the practical likelihood of police intrusions. Because they rely on perceptions of police officers or on happenstance like tips, the traditional methods cannot scale. Actuarial methods can.

This Article argues that the purpose of individualization is to minimize hassle. Hassle is the chance that the police will stop or search an innocent person against his will.[9] After all, while we may not all be Harvard students, we do inevitably engage in activities that predict a high chance of crime. We pace. We circle the block. We travel with bulky or light luggage. And we attend Phish concerts. If the police were able to act on all reasonably predictive statistical models en masse, we would experience an inappropriate and dramatic increase in suspicion-based searches and seizures. The individualization requirement constrains hassle by ensuring that an innocent person is unlikely to be stopped or searched even if he seems suspicious from time to time.

The twin prongs of individualized suspicion—suspicion and individualization—ought to be loosely guided by hit and hassle rates. A hit rate is the probability that a stop or search will uncover evidence of a crime. Hit rates measure suspicion and must meet the relevant standard (reasonable suspicion for Terry stops and probable cause for full-blown searches and seizures).[10] Hassle rates, by contrast, measure the probability that an innocent person within the relevant population will be stopped or searched under the program. Hassle rates speak to individualization. If a program is likely to cause too much hassle, the police have not sufficiently narrowed the scope of the investigation, no matter how high the hit rate may be. Hassle rates keep track of the societal costs of criminal investigations, and hit rates ensure that the costs are justified.
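The two rates can be made concrete with a little arithmetic. The sketch below is illustrative only. The function names and the sample counts are assumptions introduced here, not figures drawn from any study or case, and the hassle rate is computed under one plausible reading of the definition above (innocent people searched, divided by innocent people in the relevant population).

```python
def hit_rate(searches: int, hits: int) -> float:
    """Share of stops or searches that uncover evidence of a crime."""
    if searches <= 0:
        raise ValueError("searches must be positive")
    return hits / searches


def hassle_rate(innocent_searched: int, innocent_population: int) -> float:
    """Chance that an innocent member of the relevant population is searched."""
    if innocent_population <= 0:
        raise ValueError("innocent_population must be positive")
    return innocent_searched / innocent_population


# Hypothetical program (all numbers invented for illustration):
# 1,000 searches, 400 of which find evidence, carried out in a
# population that contains 95,000 innocent people.
searches, hits, innocent_population = 1_000, 400, 95_000
innocent_searched = searches - hits  # assumes every miss is an innocent person

program_hit_rate = hit_rate(searches, hits)
program_hassle_rate = hassle_rate(innocent_searched, innocent_population)
print(f"hit rate: {program_hit_rate:.1%}, hassle rate: {program_hassle_rate:.2%}")
```

In this invented example the program clears a 40% hit rate while exposing well under 1% of the innocent population to a search. The same hit rate applied to far more searches would leave the first number unchanged and drive the second one up.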

Courts and scholars have already grown accustomed to examining hit rates when data are available.[11] When they are not, courts and scholars have used common sense and experience to estimate the same thing: whether the police had a decent shot of uncovering incriminating evidence during a stop or search. But hit rates alone cannot keep the government in check. Hassle rates are also crucial to the Fourth Amendment’s protection. For rare crimes, like murder, a high hit rate can guarantee a low hassle rate. But for more common crimes, such as drug possession,[12] an additional constraint must curb governmental intrusions. At a higher level of abstraction, hit and hassle perform the delicate balancing of interests that the Fourth Amendment demands.
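The claim about rare crimes follows from a short counting argument. The notation is introduced here purely for illustration: let N be the size of the relevant population, G the number of people in it who have committed the crime, S the number of searches, and h the hit rate, and assume that no one is searched more than once. Hits cannot outnumber the guilty, which caps the number of searches, which in turn caps the number of innocent people searched and therefore the hassle rate.

```latex
\begin{align*}
hS &\le G \quad\Longrightarrow\quad S \le \frac{G}{h}, \\
(1-h)\,S &\le \frac{1-h}{h}\,G, \\
\frac{(1-h)\,S}{N-G} &\le \frac{1-h}{h}\cdot\frac{G}{N-G}.
\end{align*}
```

When the crime is rare, G/(N - G) is tiny, so any respectable hit rate holds hassle to a low level. When the crime is common, the bound is loose, and the hit rate alone offers little protection to the innocent.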

Hassle explains many of the instincts already embedded in the individualization precedent. For example, it explains the courts’ consistent preference for police narratives chock-full of detail, even when each additional detail does not contribute much to the amount of suspicion.[13]

Hassle can also explain the Harvard dorm room hypothetical. If an officer requests warrants to search the dorms of all 6,000 students living in Harvard residential halls, we know ex ante that approximately 2,400 of them will not have illegal drugs. In one fell swoop, the police will have imposed significant costs on the innocent population in the Harvard and Cambridge communities.
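The arithmetic behind the hypothetical can be checked directly. The sketch below uses only the figures given in the text and adds two simplifying assumptions of its own: one dorm room per student, and a study percentage that holds exactly across the rooms searched.

```python
# Figures taken from the hypothetical in the text.
students = 6_000
percent_with_drugs = 60  # the study's estimate

# Assumptions for this sketch: one room per student, and the study's
# percentage applies exactly to the rooms that would be searched.
rooms_with_drugs = students * percent_with_drugs // 100
rooms_without_drugs = students - rooms_with_drugs

# Suspicion prong: chance that a given search turns up drugs.
hit_rate = rooms_with_drugs / students

# Individualization prong: the warrants cover every room, so every
# innocent resident is searched.
innocent_searched = rooms_without_drugs
hassle_among_residents = innocent_searched / rooms_without_drugs

print(rooms_with_drugs, rooms_without_drugs, hit_rate, hassle_among_residents)
```

If the relevant population is limited to dorm residents, the hit rate comfortably exceeds any plausible reading of probable cause, yet the hassle rate is as high as it can be: no innocent resident escapes the search.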

On the other hand, the Harvard dorm room hypothetical also commands attention to a lost opportunity. If the warrant were issued, the Cambridge police could have greater success searching a Harvard student and could create inroads for criminal enforcement within elite communities otherwise immune to the enforcement of minor criminal laws. But the dominant understanding of individualization will push the police out of the Harvard dorms and back into the homes and pockets of the poor, the uneducated, and the traditionally suspect.

Courts and advocates should reform the concept of individualization to focus on minimizing hassle.[14] And they can do so without making significant changes to existing doctrine. This Article explains why and how in three parts.

Part I collects the definitions and justifications for individualization that have floated around the legal scholarship over the last five decades. Individualization has attracted the attention of a long list of distinguished scholars, and with the exception of Fred Schauer, all have vigorously defended the concept.[15] Charles Nesson champions the notion of case-by-case assessment.[16] Laurence Tribe highlights the importance of human intuition.[17] Andrew Taslitz argues that suspicion should be based on conduct under the control of the suspect,[18] and Bernard Harcourt suggests that police should trace suspicion from a crime to a suspect rather than attempt to predict which individuals are criminals.[19] Each of these theories fails to describe actual or desirable outcomes. Nesson’s and Tribe’s theories entrench the discretion of police officers in the teeth of ample evidence of bias and error, while Taslitz’s and Harcourt’s theories put impracticable limits on criminal investigation.

There is a way out. Part II introduces hassle, a concept that operates behind the scenes of the individualization doctrine and deserves attention. Hassle measures how much pain an investigatory program will impose on the innocent even when the program is moderately successful at detecting crime. Even if a new police tool does a very good job of detecting suspicious conduct, if the tool is inexpensive and used with abandon, the hassle it brings to the wrongly suspected should justify Fourth Amendment scrutiny on its own. The concept of hassle will become increasingly valuable in an era of technological change. Indeed, the Foreign Intelligence Surveillance Court reached for the concept of hassle without having a name for it when the court invalidated aspects of the National Security Agency’s (“NSA”) Upstream program.[20] And hassle matches and explains judicial instincts about individualization better than other theories. Although courts are likely to resist defining hassle thresholds in precise statistical terms, hassle provides a badly needed benchmark. It fills the vacuum in individualization’s organizing principles.

Part III explores the implications of hit- and hassle-based individualized suspicion. Not only is a hassle-based individualization requirement more descriptively accurate but it also has desirable normative implications for police practices. It tolerates innovation in policing. It also permits law-enforcement agencies to limit their operations using carefully designed random selection instead of relying on the luck and resource constraints that currently control their scope.[21] As long as a program has a high enough hit rate to satisfy suspicion requirements, the police should be permitted to keep hassle rates low by selecting among suspicious individuals in a mechanical but evenhanded way. For example, suppose new software can analyze video footage from security cameras to detect hand-to-hand drug sales with a high hit rate (that is, most of the time the software alerts, it is correct). Because of the high frequency of this sort of crime, incessant monitoring throughout a city is likely to produce too much hassle. Rather than abandoning the software altogether, the police could reduce hassle by responding only to a randomly selected portion of the alerts. Granted, this means that police will choose arbitrarily among individuals who are equally likely to have committed a crime, but arbitrary selection is more legitimate, less biased, and less prone to manipulation than the organic selection that police use today.
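The effect of responding to a random portion of the alerts can be sketched in a few lines. Everything named below is hypothetical: the function names, the alert counts, and the 80% hit rate are assumptions chosen for illustration, not properties of any real software. The point is only that uniform random selection leaves the expected hit rate untouched while scaling the expected number of innocent people stopped in proportion to the fraction of alerts acted on.

```python
import random


def select_alerts(alerts, fraction, seed=0):
    """Choose a uniformly random subset of alerts to act on."""
    if not 0 <= fraction <= 1:
        raise ValueError("fraction must be between 0 and 1")
    k = round(len(alerts) * fraction)
    # Random selection ignores who triggered the alert, so no officer
    # discretion enters the choice.
    return random.Random(seed).sample(alerts, k)


def expected_outcomes(total_alerts, hit_rate, fraction):
    """Expected hits and innocent stops when only a fraction is acted on."""
    responded = total_alerts * fraction
    return {
        "expected_hits": responded * hit_rate,
        "expected_innocent_stops": responded * (1 - hit_rate),
    }


# Hypothetical city: 10,000 alerts, 80% of them correct.
everything = expected_outcomes(10_000, 0.8, 1.0)
one_in_ten = expected_outcomes(10_000, 0.8, 0.1)
print(everything)
print(one_in_ten)

# Picking the alerts to act on, by identifier.
chosen = select_alerts(list(range(10_000)), 0.1, seed=42)
print(len(chosen))
```

Acting on one alert in ten cuts the expected number of innocent stops to a tenth of what full enforcement would produce, and the share of stops that uncover a crime stays the same. The cost, as the text acknowledges, is that the same fraction of guilty parties goes unpursued.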

These changes offer some hope of redistributing the costs of fruitless searches from the poor and minority communities that frequently come into contact with police to the wealthier, whiter communities that traditionally have lived above suspicion.[22] This would be a welcome improvement.

I. Individualized Suspicion Amiss

Before conducting a stop, search, or another invasion of a Fourth Amendment interest, the government usually must have sufficient suspicion.[23] This suspicion is measured by the chance that law enforcement will uncover evidence of a crime.

Sometimes the government will have abundant suspicion, as when a police officer observes an illegal assault rifle, or finds a DNA match, or follows an impaired driver as he pulls off the road, climbs up a tree, and yells “I’m an owl!”[24] But the police do not need certainty or anything close to it. They need only to satisfy the probable cause standard in the case of searches, arrests, and exigencies and to satisfy the reasonable suspicion standard in the case of brief stops and limited frisk searches.[25]

The Supreme Court has declined to define these standards with statistical precision, but it has stated that probable cause requires a “fair probability” that evidence of a past or future crime will be uncovered.[26] This standard is lower than the preponderance standard, and it is roughly estimated as a 33 1/3% chance of recovering evidence of a crime.[27] The reasonable suspicion standard is lower still.[28] Thus, police must have some chance of success before engaging in a search or a stop, but that chance need not be large.

But courts frequently make a further, independent inquiry. In addition to meeting the threshold probability for suspicion, the “belief of guilt must be particularized with respect to the person to be searched or seized.”[29] Thus, it is not enough for law enforcement to have adequate suspicion. Police officers must have particularized, or, as it is often called, individualized suspicion. While courts have applied the individualization rhetoric inconsistently,[30] the requirement is sacrosanct for most criminal-procedure scholars.[31] And yet its purpose remains elusive.

This Part will identify and assess four theories that have attempted to describe and justify the individualization requirement. In order, they are the snowflake theory (each case is unique and cannot be based on generalizations); the felt belief theory (to promote institutional legitimacy, law enforcement and judges actually need to harbor a belief of guilt); the suspicious conduct theory (suspicion should be based on the suspect’s actual behavior); and the crime-out theory (suspicion should build from the crime toward a suspect rather than the other way around).

All of these explanations for the meaning and purpose of individualization make wishful distinctions. They fail to describe actual jurisprudential outcomes and, in any event, are normatively undesirable.

A. The Snowflake Theory

The most natural reading of “individualization” draws a distinction between generalizations and case-specific facts.[32] It demands holistic reasoning—rather than the application of probabilities—so that individuals are judged for who they really are.[33]

Holistic reasoning finds support in a range of contexts from affirmative action to consumer protection.[34] Nesson famously argued that the reasonable doubt standard of proof required for criminal prosecutions “does not lend itself to being expressed in correlative probabilistic terms and, indeed, operates in an environment judicially structured to submerge probabilistic quantification in the factual complexity and uniqueness of specific cases.”[35] Holistic reasoning also incorporates the preference for human intuition celebrated in the Trial by Mathematics literature, in which Tribe and others argue that human intuition has its own irreplaceable wisdom that scientific predictions cannot match.[36]

In the context of criminal investigation, Taslitz has been the most vocal advocate for case-by-case reasoning. He argues that individualization has an important function when criminal suspects present “extraordinary combinations of behaviors and traits” that generalities cannot adequately capture.[37]

Neither Taslitz nor Nesson harbors any illusions that holistic determination is error free. Rather, they argue that justice requires considering combinations of facts unique to the individual beyond what systems of statistical inference can offer. Each case deserves its own evaluation.

The Supreme Court shares some responsibility for this romance with unique cases, as its criminal-procedure jurisprudence emphasizes the importance of case-by-case, fact-specific analysis. Although the Court has approved the use of some formulaic profiles,[38] more often it has insisted that police couch their justifications in ornate descriptions to show that each suspect was chosen for unique and special reasons.

The reasoning of Richards v. Wisconsin[39] is typical. In that case, the Court had to decide whether a police officer could execute an arrest warrant without first knocking on the door and announcing himself if the arrest concerned a drug-related offense. The Fourth Amendment usually requires that police announce themselves when executing an arrest,[40] but if the police have reasonable suspicion that the defendant will destroy evidence or attack the arresting officers, they may forgo the knock-and-announce procedure.[41] The government’s theory was that the disposable nature of drugs made all drug-related arrests good candidates for a per-se knock-and-announce exception.[42] Justice Stevens’s unanimous opinion explained that a categorical rule allowing drug arrests to proceed without a knock and announce would overgeneralize since it would encompass many situations that pose no risk of evidence destruction or violence.[43] By contrast, if the police know that the arrestee is aware of their presence—as was the case for Richards—the suspicion that evidence may be destroyed would be tailored to the situation, and police would be free to overtake the premises without knocking.[44]

Justice Stevens started his opinion by rejecting generalizations, but by the end, he did nothing of the sort. As he applied the law to the facts, he substituted one rule (drug crimes = no-knock exigency) for another (drug crimes + awareness = no-knock exigency).

Put another way, suppose that a drug offender is very likely to dispose of his drugs when the police knock and announce their presence. A rule allowing the police to enter without first knocking is no more mechanical than Justice Stevens’s rule permitting police to enter when one more fact (awareness) is added. Justice Stevens’s rule likely increases the probability that a defendant will attempt to destroy drugs, but this advances the suspicion prong of individualized suspicion, not the individualization prong. As a matter of individualization, Justice Stevens’s rule relies just as heavily on generalizations as the rule he rejects.

Case-by-case reasoning cannot and does not eliminate generalizations. Instead, it forces the generalizations to operate in informal and ad hoc ways. An inevitable result of case-by-case thinking is the creation of squishy factors like “furtive movements” that give the government’s cases a gloss of particularity. These factors are based on in-the-moment, holistic impressions that can absorb any number of details that the police observe.[45] The obligation to generate a narrative distracts from much more important questions, such as whether the government is doing a good job choosing its targets.

Cases can be unique in the sense that they involve one-of-a-kind combinations of factors, but the reasoning of a case cannot be unique. Prediction requires generalization. Whatever is truly unique about a case cannot support an educated guess about its outcome unless it is analogized to some other generalization. The generalizations can be more finely grained by adding variables,[46] but the nature of the prediction does not change. Schauer has it right: “[O]nce we understand that most of the ordinary differences between general and particular decisionmaking are differences of degree and not differences in kind, we become properly skeptical of a widespread but mistaken view that the particular has some sort of natural epistemological or moral primacy over the general.”[47]

It might seem quite unnatural and even immoral to give up on the promise of unique treatment, but one need only think of McCleskey v. Kemp[48] to see the tragic legacy of “case-by-case” thinking.[49] In McCleskey, an African-American man convicted of murder challenged his death sentence using statistical evidence of racial bias.[50] McCleskey’s challenge relied on the Baldus study, an analysis of over two thousand death-eligible murder cases tried in Georgia during the 1970s.[51] The study showed that the races of the victims and murderers were strong determinants of capital sentences even after controlling for dozens of other explanatory variables (such as the method of killing, the victim’s experience before death, the defendant’s prior record, and the number of victims).[52] The Supreme Court accepted the validity of the statistical study, but it nevertheless rejected McCleskey’s challenge because each individual death-row defendant had his own chance, in front of his own jury, to show why his case was unsuitable for capital punishment based “on the particularized nature of the crime and the particularized characteristics of the individual defendant.”[53] This move allowed the Court to sweep glaring patterns of bias under the rug in order to preserve the illusion of case-by-case determination.

McCleskey embedded a misunderstanding of epic proportions into equal-protection law. The Court failed to appreciate the import of the Baldus study. Despite the trappings of a “holistic” process, McCleskey was judged using generalizations. But instead of being judged by the right generalizations (those related to the heinousness of his crime), he was judged by the wrong ones (his race and the race of his victim). By denying that generalizations were used at all in his conviction, the Court was able to avoid accountability. The individualization requirement has allowed the myth of unique cases to pollute the law of criminal investigations as well.

B. The Felt Belief Theory

Nesson’s work rejects purely probabilistic proof, at least in the case of criminal convictions, by arguing that the jury should have an “abiding conviction” that the defendant did it.[54] Jurors should harbor a complete and confident belief, even if past experience and common sense tell us that there is a nonnegligible chance they are wrong. Nesson argues that the mistakes of wholly convinced jurors, even if they are wrong more often than statistically derived determinations of guilt, serve an objective that is different from and sometimes superior to accuracy: final resolution.[55]

Under this theory, judgments coming from wholly convinced judges and juries, flawed as they may be, serve the institutional interests of the courts by presenting the party—particularly the losing party—and the public with a monolithic outcome that leaves little room to doubt the factual findings.[56] This helps preserve the authoritative reputation and popular legitimacy of the judiciary[57] and potentially helps jurors and litigants feel more psychological closure with the matter.[58]

Although Nesson focuses on the reasonable doubt standard required for criminal convictions, the same institutional interests are implicated with legal determinations using lower standards. Indeed, Nesson cites the famous blue bus hypothetical, based on Smith v. Rapid Transit, Inc.,[59] a civil action decided under the much lower preponderance standard. In Smith, the plaintiff suffered injuries when she was forced off the road by a bus barreling toward her at forty miles per hour.[60] To prove her case, the plaintiff offered her own testimony (which, for the purposes of this discussion, we can assume was credible) that the bus was blue—the color of the defendant’s bus fleet. Although the original case opinion did not offer a precise estimate of the chance that somebody else’s blue bus may have been operating on the street at the time of the plaintiff’s accident, the case has bred a sort of legal urban myth that the plaintiff submitted evidence showing that 80% of the blue buses running down that particular street were the defendant’s and 20% were not. Whatever the true probabilities, the action was thrown out for failure to prove the case using more than mere “mathematical chances.”[61] A long line of distinguished scholars insist that this was the correct outcome.[62] Indeed, they still defend the outcome of Smith even if the defendant had been responsible for 99% of the blue buses running down the street.[63] After all, a jury would not be able to quiet the nagging thought that it may have been that other infrequent bus.[64]

The individualization requirement for criminal investigations might promote the same interests in finality and responsibility. Even if human decisionmaking is flawed, perhaps a court should still require that an officer feel certain that a suspect is guilty before stopping and searching him, or at least be able to gut check his decision rather than abdicate his judgment to a statistical process.

Sure enough, Carroll v. United States,[65] an old case frequently quoted for its definition of probable cause, could support a distinction between belief and unenthusiastic assent to mathematical inference. In that case, the Supreme Court explained that an officer must have sufficient information “to warrant a man of prudence and caution in believing that the offense has been committed.”[66] Many cases have made clear that an officer’s subjective belief is not enough without additional objective evidence that would lead a reasonable officer to conclude that crime might be afoot.[67] But these cases do not necessarily deny that subjective belief is a necessary condition for individualized suspicion.

While the felt belief theory and the snowflake theory both lead to rejections of purely statistical evidence, they do so on different grounds. The snowflake theory is built on a fallacy; it assumes, wrongly, that distinctions can be drawn between cases without resorting to a combination of generalizations. Thus, the snowflake theory’s flaws are insurmountable. Case-by-case reasoning simply doesn’t exist. The felt belief theory, on the other hand, does articulate a standard that can be implemented. Individualization would be satisfied if a human can trust the evidence using his own senses and experiences.

Nevertheless, the felt belief theory has three significant and intertwined flaws. First, it is not possible to separate evidence that can lead to a felt belief from evidence that cannot. For example, there is no reason to assume that witness testimony about the operator of the blue bus can move a juror to complete belief but methodologically sound calculations of the chance that the defendant operated the blue bus cannot. The distinction imports somebody’s strong preferences for certain types of evidence, allowing some types of evidence to persuade the juror, judge, or officer to a felt belief while denying other types of evidence the chance to do so.

Second, the theory gets the moral imperative backwards. Nobody—whether juror, magistrate, or police investigator—should maintain his beliefs with full conviction. Indeed, procedural rights and judicial appeals are designed to avoid any delusions of perfection.[68] Since most evidence should leave some doubt in the minds of the decisionmakers, evidence cannot be categorized along the lines that Nesson and others suggest.

Finally, if the felt belief theory rejects all mechanical forms of evidence, the theory runs into paradoxes when such evidence is extremely accurate. Consider the cold hit DNA case, which meets its burden of proof entirely through references to probability. Suppose the government presents evidence that a defendant’s DNA matches a sample from a rape kit across all thirteen tested genetic biomarkers and that this unique combination of markers is likely to occur only one out of 56 billion times. The chance that somebody else in the world (let alone in the country) would share this much of the defendant’s genetic code is negligibly low.[69]

Yet the cold hit DNA case differs from the blue bus hypothetical only in degree. If the plaintiff in Smith could prove that the only blue bus, aside from the defendant’s, drove down Main Street only once every thousand years, her case would rely just as much on probabilities. If pure mathematical proof—divorced from the sorts of evidence that juries can see, hear, and perceive themselves—cannot inspire a felt belief, then cold hit DNA cases would have to fail. But this outcome is intolerable as a practical matter and perverse as a normative matter.

If DNA cases are legitimate, the blue bus hypothetical must be reconsidered, too. There is no reason to allow a plaintiff to win a suit against a bus company that runs only 49% of the city’s blue buses when she supplements her evidence with a barely credible eyewitness while rejecting the suit of a plaintiff who sues a bus company that runs 99% of Main Street’s blue buses.[70] Instead of fretting over the probabilistic nature of evidence, courts should strive to make peace with the doubt that does, and should, accompany all factual determinations in law. After all, the study of quantum physics is rapidly moving toward an understanding of the universe that is driven entirely by probability theory.[71] If the laws of nature are probabilistic, surely the laws of law will have to be as well.

Courts have proven to be much less perplexed by probabilistic evidence than the academic debate would suggest. The Arizona Supreme Court had to decide a case with all the zany qualities of a law school hypothetical: a student at a boarding school contracted a severe case of salmonella from one of between 100 and 120 meals consumed on campus.[72] Every meal but one was prepared by an independent contractor, but that one meal was prepared by the school, and none of the evidence presented could help establish or rule out the school-made meal as the cause of the plaintiff’s illness.[73] Despite the similarities to the famous blue bus hypothetical, the Arizona Supreme Court had no difficulty granting the school’s motion for summary judgment and allowing the case against the contractor to proceed.[74]

In the context of criminal procedure, courts have already accepted investigatory tools that substitute for human judgment. Cold hit DNA matching is one unusually accurate statistical tool, but there are other error-prone tools that have been deemed sufficient for Fourth Amendment standards. For example, the Supreme Court has approved the use of alerts from narcotics-sniffing dogs to establish probable cause to search cars and luggage.[75] Justice Souter strongly disagreed with these decisions partly on the grounds that narcotics dogs make errors.[76] He rightly criticized the Court’s early opinions on the topic for either failing to address canine error or, worse yet, implying that the dogs are infallible.[77] Subsequent cases show that the Court now acknowledges that the dogs make mistakes, but it remains willing to permit their use anyway.[78]

This is sensible. Although narcotics dogs do create false alerts,[79] their hit rates far outperform those of highway patrolmen in predicting which cars contain drugs.[80] They even outperform the success rates of warrant-based home searches—the gold standard for criminal investigation.[81]

For some scholars, however, the dog-sniff cases showcase everything that is wrong with criminal procedure today.[82] Most scholars object for the reasons just discussed—that drug-detecting dogs are prone to error.[83] Others object that dogs can be used in discriminatory ways, which is true enough depending on how or, more importantly, where they are deployed.[84] But this remains a concern for every type of investigation, including those based on human observations and judgments. Moreover, the random error of the dog nose, when it does misfire, is usually free from the prejudices that can influence the (nonrandom) error of human police.

Still, some may be troubled that searches based on dog alerts are not grounded in any evidence of suspicious behavior. This objection will be taken up next.

C. Suspicious Conduct Theories

Taslitz proposes an appealing theory that individualization requires an assessment based on the suspect’s own conduct or behavior.[85] This theory eliminates the injustice of treating individuals differently due to attributes that they cannot manage or alter themselves.

Racial profiling is the motivating example for Taslitz. Although crime rates do vary by race and gender,[86] the variance by race is greatly diminished when socioeconomic conditions are controlled.[87] And since law enforcement has historically given too much weight to race and gender,[88] a prophylactic prohibition on using those factors in criminality profiles helpfully pushes law enforcement to use other, more predictive factors to determine reasonable suspicion and probable cause.

But the conduct theory of individualization falls apart outside the context of racial profiling. While the conduct theory matches some of the classic means of building suspicion—casing a store[89] or looking around nervously[90]—it cannot explain large swaths of criminal procedure.[91] Police are allowed to stop and search some people who have not exhibited any odd behaviors. Take, for example, the unlucky lot whose cars are searched in response to a false alert from a narcotics-sniffing dog. Unless they were carrying a trunkful of Snausages, these individuals’ conduct played no role in the police’s decision to search. Similarly, individuals matching a description from a victim or witness cannot alter their behavior to avoid suspicion. And the subjects of tips form another class of searched individuals whose suspicion was not necessarily based on conduct.[92] Like the targets of a mistaken dog nose, these subjects will be unable to alter their behavior to avoid the stop.

Even if it were possible to reconfigure individualized suspicion so that it conformed to a conduct-driven rule, it would be unwise to do so. First, the line between conduct and attribute is difficult to manage. For example, is a person’s location a form of conduct? If so, this detracts from the rule’s appeal. Surely many people who live in or travel through “high-crime” neighborhoods would avoid those areas if they could. And what about the Harvard students living in a dormitory? Attending college is a choice. Would the conduct rule allow the officer in the Article’s introductory hypothetical to obtain a warrant to search all Harvard dormitories? The line between conduct and nonconduct is inadministrable.

Moreover, even if the rule were administrable, requiring suspicion to arise from observed conduct alone would displace some of the more reliable gauges for suspicion (DNA matching, dogs, tips from reliable informants) and would consequently put more pressure on some of the less reliable profiles for crime (nervousness, flight, style of dress). This shift in focus could exacerbate the already lopsided distribution of law-enforcement costs on the poor.

D. Crime-Out Investigations

A final theory for individualization rejects law-enforcement investigations that go out in search of suspicious people in favor of investigations stemming from an already committed crime. Harcourt’s book Against Prediction warns against actuarial law-enforcement methods that attempt to sort out suspicious people.[93] He objects in particular to predictions of criminal personality.[94] Profiles developed to identify a segment of the population that is more likely to commit crime bear an unnerving resemblance to past eugenics movements.[95] Probabilistic inferences that stem from the scene of a particular crime, by contrast, can avoid these uncomfortable similarities. As long as police departments work outward from a particular crime rather than surveying the public and looking for suspicious types, we can be confident that suspicion is growing from the facts of a particular, individual case.

In other words, we might distinguish “crime-out” investigations from “suspect-in” investigations. But while this distinction is useful,[96] it cannot do all the constitutional work here. The scope of suspect-in investigations includes many sound and sanctioned practices. To give just one example, the conduct that caught Officer McFadden’s eye in Terry v. Ohio led to a suspect-in investigation. Terry was casing a men’s clothing store, pacing in front of it and conferring with his conspirator. McFadden’s observations of this behavior aroused his suspicion even though he was not following the leads from an already committed crime.[97]

McFadden’s decision to stop and question Terry was sufficiently particularized for the Court.[98] What McFadden observed is a useful and often deployed actuarial profile: people who pace in front of a building, paying attention to its details, might be plotting a robbery. Of course, the profile is not perfect—chronic pacers, window shoppers, and architectural enthusiasts may be lumped in with the robbers. But courts have found that this type of evidence is sufficiently individualized.

Moreover, the Terry case points to a larger problem with Harcourt’s definition: adhering to the crime-out mode of individualization would wipe out most of the opportunities for police to thwart attempted criminal activity.[99] It is also of limited value for underreported crimes. Crime-out investigations only begin once a crime has been committed and the authorities know that it has been committed.[100] The scope is much too narrow for a constitutional limit on investigation.

E. Ending the Status Quo

Existing theories of individualization fail to provide a satisfying account of its purpose. In practice, the doctrine has served only to ossify the familiar methods and tools, thereby preserving the status quo for no principled reason. Supreme Court precedents place great emphasis on the narrative of the experienced policeman,[101] and as a result the most respected sources of individualized suspicion are the least reliable and the least fair.

An officer’s testimony about what he or she observed is prone to misjudgment or even outright deceit (a practice common enough to have inspired a cute nickname—“testilying”[102]). And apart from perjury, judges expect officers to use squishy, subjective factors like furtive movements,[103] suspicious bulges,[104] the officer’s training and experience,[105] “surveillance-conscious behavior,” and “high-crime areas” to build up suspicion in a particularized way, despite ample evidence that these factors are poor predictors of criminal activity.[106] While such evidence seems to satisfy courts’ desire for individualization, the evidence does not reliably increase the chance that the target is engaged in crime.[107] Thus, current attempts at individualization do worse than nothing. They corrode the suspicion standards by allowing an officer’s unscientific opinions to guide predictions of crime.

It gets worse. Traditional routes to individualization distribute their intrusions in severely regressive ways. It’s no secret that discretion- and observation-driven policing leads to more searches of poor and minority subjects.[108] This is at least partially a result of where police spend their time. But the accumulation of recent Fourth Amendment rules has not helped. The upper classes can afford copious curtilage,[109] usually hang out in “low-crime areas,”[110] and may wear form-fitting bulgeless clothing more often.[111] Thus, poor and minority communities serve a disproportionate share of the prison time for minor drug convictions, despite having drug-usage rates similar to those of upper-class and white communities.[112] In contrast, algorithms are more likely to cast their cold accusations on everybody.

The unavoidable conclusion is that individualization, when courts have insisted on it, has permitted a high degree of error to infect the criminal-investigation process. Justifications for the individualization requirement have spared the courts the embarrassment of rejecting long-trusted sources of suspicion, but so far existing theories of individualization have offered very little to justify its exalted place in constitutional law.[113]

II. Hassle

The individualization half of “individualized suspicion” is in trouble. Scholars who defend it cannot articulate which types of criminal investigations should be considered unconstitutional despite their high likelihood of success. And yet the concept has irrefutable magnetism.

This Part proposes a new understanding of the individualization requirement. Courts and scholars have focused exclusively on the particulars of the stopped or searched suspect. They ask, “Why her?” This is the wrong question. More precisely, the suspicion layer of Fourth Amendment protection already addresses this question. Why her? Because she lives in a Harvard dorm room, so there is a 60% chance that she has narcotics.

Instead, the individualization inquiry should ask, “Why not everybody else?” After all, while we do not all live in Harvard dorm rooms, we do all wind up, at some point, in circumstances in which our benign behavior may legitimately pique law-enforcement suspicion. We stand on corners, and look over our shoulders, and purchase Bob Marley CDs. The Fourth Amendment must offer some assurance that most of the time, most of us will be excluded from stops and searches even though we pass through these temporary states of heightened suspicion.

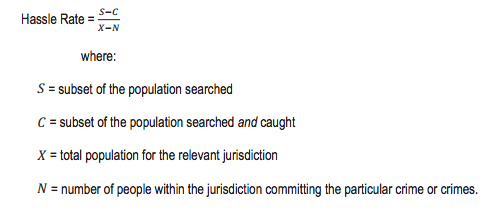

This Part identifies the unsung virtues of individualization. If “suspicion” can be summarized mathematically as a hit rate (the chance that evidence will be discovered in the course of a stop or search), individualization can be captured as a hassle rate: the chance that an innocent person will have to undergo a stop or search.

Section II.A provides a descriptive model that captures in a nutshell how well a given criminal-investigation program is working. Section II.B explains why hassle matters as a Fourth Amendment interest separate from suspicion. Section II.C then establishes the connection between hassle rates and individualization. The individualization practices routinely endorsed in case law and scholarship accomplish the goal of limiting how many other people the police can practicably search. This reduces hassle for innocent people. Moreover, although discussions of individualization have historically focused on the searched or stopped suspect, the interest in excluding others has quietly guided the reasoning. Some courts have explicitly considered hassle without quite knowing how it fits into the Fourth Amendment jurisprudence. Finally, Section II.D concludes by showing that understanding individualization in terms of hassle explains many other instincts that have circulated in the individualized-suspicion case law.

By renovating individualization consciously to minimize hassle, courts can transparently address problems that have been latent motivators in probable-cause and reasonable-suspicion cases. At the very least, renovating the concept of individualization gives it something useful to do while satisfying a demand for a type of justice that has not yet found a home in the Fourth Amendment’s protection.

A. A Compact Model for Criminal Investigations

A criminal-investigation program can be summarized using four statistics: the base rate, the hit rate, the miss rate, and the hassle rate.

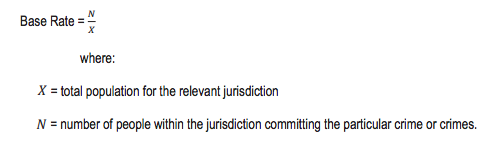

The base rate is the rate at which crime is committed.

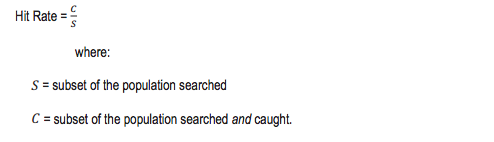

The hit rate is the proportion of searched individuals who are caught with contraband or evidence of a crime.[114]

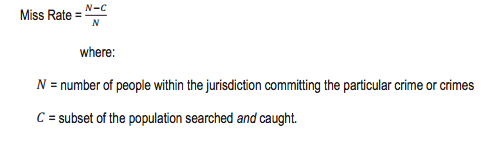

The miss rate reflects the proportion of criminals who are not caught.

And finally, the hassle rate shows the proportion of the innocent population who are searched fruitlessly.

Each of these figures will vary by crime, program, jurisdiction, time frame, and population. And we will often lack the data needed to calculate each of the statistics with precision. This is especially true for miss rates and base rates since, by definition, criminals who are not detected by law enforcement cannot appear in police data. But taken together, rough estimates of these statistics form a thumbnail sketch of the costs of criminal-investigation processes. In fact, estimates of any three will suffice since the fourth can be derived from the others.[115]
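One way to write the four statistics, and the relationship that lets any three determine the fourth, is sketched below. The notation is an assumption introduced here for illustration: $N$ is the population, $G$ the number of people who committed the crime, $S$ the number of people searched, and $H$ the number of searches that turn up evidence. The sketch also assumes that each search targets a different person.

```latex
\begin{align*}
\text{base rate } b &= \frac{G}{N}, &
\text{hit rate } h &= \frac{H}{S}, \\
\text{miss rate } m &= \frac{G - H}{G}, &
\text{hassle rate } z &= \frac{S - H}{N - G}.
\end{align*}
% Since H = G(1 - m) = bN(1 - m) and S - H = H(1 - h)/h, it follows that
\[
z = \frac{b\,(1 - m)\,(1 - h)}{h\,(1 - b)} .
\]
```

On these definitions, hassle rises with the base rate and falls as either the hit rate or the miss rate rises.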

An optimal criminal-investigation system will have low base rates, miss rates, and hassle rates, and high hit rates. Ideally these rates will not vary dramatically across race and class. But the incentives to optimize the scale and distribution of these rates come from varying sources of law.

Base rates are not in the government’s direct control because they measure the criminal behavior of the population. We depend on political processes to create policies that will deter the commission of future crime. Since crime deterrence is a majoritarian interest, the incentives do not have or need constitutional reinforcement. The Fourth Amendment is also agnostic about miss rates, again leaving it to political processes to create the right incentives for detection.[116] It is worth noting, though, that disparities in miss rates across race (and other constitutionally protected classes) could implicate the Fourth Amendment or the Equal Protection Clause if race is used to select a population for further investigation and screening.[117]

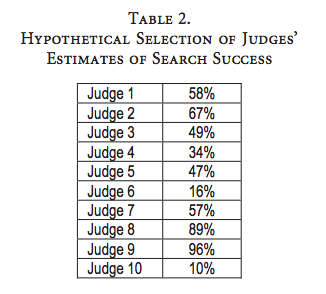

Hit rates are a different matter. The Fourth Amendment keeps a watchful eye on them through the suspicion standards (either probable cause or reasonable suspicion). Because many jurisdictions have monitoring and record-keeping requirements as a result of prior consent decrees,[118] there are some fairly good data on the hit rates for a smattering of programs. In the late 1990s, Maryland’s highway patrol successfully recovered contraband in over half of the warrantless car searches it conducted based on probable cause (as opposed to less fruitful searches conducted with the consent of the drivers).[119] Other jurisdictions didn’t perform quite as well but still cleared the 33⅓% hurdle.[120] In contrast, a district court found that New York’s stop-and-frisk program was unconstitutional partly because of its low hit rate.[121] Hit rates are useful signals for the adequacy (or not) of police methods that attempt to single out suspicious behavior. But hit rates cannot alone capture the costs to society and the people in it.

The hassle rate is critical.[122] The word “hassle” will at times understate the intrusion and disrespect that can characterize stops and searches (particularly when the use of force is involved[123]), but the virtue of the term is that “hassle” does not exaggerate the problem. Sometimes, hassle is all that it is. A police officer may briefly detain a person to ask a few questions and, when the misunderstanding is resolved, all parties may go on their way having suffered only mild irritation. When a search is unusually intrusive, courts will require additional suspicion.[124] When a search is really intrusive, courts will invalidate it on substantive due process grounds.[125] But courts have not been in the habit of paying close attention to the typical stops and searches.

These ordinary stops and searches have significant costs. When the experience of a typical member of a community involves involuntary stops or searches, it is natural for the community to question whether the government has overreached its authority. And when an innocent person is stopped more than once in a short time, the effects are much more severe. Repeated police stops are likely to whittle away a person’s sense of democratic belonging and trust. At best, the community will come to regard police presence as a mixed blessing.[126] In essence, most people are willing to endure the inconvenience of a stop or search despite their innocence, but only if the hassle is infrequent.

B. The Importance of Hassle

Hassle is a problem of constitutional importance. If suspicion requirements ensure that the hit rate stays high enough, the individualization requirements should ensure that the hassle rates stay low enough. This simple insight unlocks the motivation behind the requirement of individualized suspicion. Individualization measures the societal costs of a law-enforcement program, and suspicion measures its justification. Both are crucial, and they depend on one another to cabin law enforcement appropriately. This Section explores the theoretical and practical importance of hassle to Fourth Amendment interests.

A constitutionally sound hit rate reveals nothing about a program’s hassle rate until we know the base rate for the crime and the miss rate for the program.[127] An example will illustrate the point. Suppose the Boston Police Department develops a profile to detect a particular crime, and the profile has a 33⅓% hit rate. When the profile identifies a suspect, two out of three times it is wrong, and the search is fruitless.[128] If the crime is quite rare (for example, murder), then the hassle rate is guaranteed to be low as long as the hit rate is respectable (as it is here). In 2011, only 403 murders occurred in the entire city of Boston.[129] Even if, by some miracle, the profile managed to detect every murder (a miss rate of 0), the false positives would have affected only 806 people. In a city of nearly 640,000 people,[130] this works out to a hassle rate of 0.13%: that is, a 0.13% chance that an innocent person would be questioned or searched in connection with a murder. Put another way, out of 100,000 innocent people, a maximum of 126 innocent individuals would be stopped or searched. Again, this assumes that every murder is detected; it represents an upper bound for hassle. I suspect most people would be willing to take these odds of having to undergo a stop or search if it meant that the police department were able to detect every last murderer.
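The arithmetic behind this upper bound can be reproduced in a few lines. This is a minimal sketch: the function name is hypothetical, and, like the text, it divides by the city’s whole population rather than by the slightly smaller innocent population.

```python
def hassle_upper_bound(crimes, hit_rate, population):
    """Fruitless searches and hassle rate if every offender is caught (miss rate 0).

    Assumes one offender per crime and a different person for every search.
    """
    searches = crimes / hit_rate           # every crime is a hit, so hits == crimes
    false_positives = searches - crimes    # searches that turn up nothing
    return false_positives, false_positives / population


# Figures from the text: 403 murders, a one-in-three hit rate, about 640,000 residents.
false_positives, hassle = hassle_upper_bound(crimes=403, hit_rate=1 / 3, population=640_000)
print(round(false_positives))    # 806
print(f"{hassle:.2%}")           # 0.13%
print(round(hassle * 100_000))   # 126 fruitless searches per 100,000 people
```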

By contrast, if the crime is quite common, such as theft, then even a respectable hit rate cannot guarantee a low hassle rate unless it also happens to have a high miss rate. In 2011, there were just under 90,000 thefts in Boston.[131] Let’s assume again that the profile has a 33⅓% hit rate: one-third of the suspects identified turn out to be thieves. If the algorithm were deployed over the entire city and managed to detect all of the 90,000 thieves, it would have caused an additional 180,000 false-positive stops or searches in the process. That is a lot of hassle for a city of Boston’s size. If the algorithm avoided searching the same innocent person more than once, the average Bostonian would face a 28% chance of being stopped or searched in the course of the year.[132]

Still, even for theft, a profile with a 33⅓% hit rate could be used without reaching these astronomical hassle rates. The police department could keep the hassle rate low by using the profile sparingly, that is, by using it to identify suspects less often. Or the profile itself may keep the hassle rate low if it regularly fails to alert, even in the presence of thieves. But these are dynamics that often go unnoticed by courts, which have so far focused on ensuring only that hit rates are high enough. By considering hit rates alone, courts risk accepting investigation methods with high hassle rates and rejecting methods with low ones.
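A short sketch shows how the theft example responds to the miss rate while the hit rate stays fixed at one in three. The function name and the sample miss rates of 50% and 90% are assumptions chosen for illustration. The 90,000 thefts and 640,000 residents are the round figures the example works with, and the whole population again serves as the denominator.

```python
def hassle_rate(crimes, hit_rate, miss_rate, population):
    """Share of the population searched fruitlessly, assuming no one is searched twice."""
    hits = crimes * (1 - miss_rate)                      # offenders the profile catches
    false_positives = hits * (1 - hit_rate) / hit_rate   # fruitless searches made along the way
    return false_positives / population


# Same one-in-three hit rate throughout; only the miss rate changes.
for miss_rate in (0.0, 0.5, 0.9):
    rate = hassle_rate(crimes=90_000, hit_rate=1 / 3, miss_rate=miss_rate, population=640_000)
    print(f"miss rate {miss_rate:.0%}: hassle rate {rate:.1%}")
# miss rate 0%: hassle rate 28.1%
# miss rate 50%: hassle rate 14.1%
# miss rate 90%: hassle rate 2.8%
```

A court looking only at the hit rate would see the same number in all three rows.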

The hassle is potentially much worse under the more lenient Terry standard for stops and frisks, which requires only a reasonable suspicion of criminal conduct and officer danger rather than probable cause.[133] Since Terry stops are used to detect a wide range of offenses, some of which are quite common (high base rates), a program that satisfies the reasonable suspicion standard could cause a good deal of pain and grief, as measured by hassle.

Two snapshots of New York City demonstrate the problem. During a two-year period from 1997 to 1998, the Street Crime Unit of the New York Police Department (“NYPD”) stopped 45,000 people based on reasonable suspicion.[134] These stops resulted in 9,500 arrests—a hit rate of 21%.[135] The rest—the other 35,500—were false alerts. In absolute terms, this looks like a lot of stops, but in a city of over 7 million people (at the time),[136] the hassle rate was actually quite low. Assuming that each stopped suspect was unique,[137] the average New Yorker had only a 0.5% chance of being stopped during the two years. Of course, the chance of being stopped was not actually distributed evenly across society, so the hassle rate even in the late 1990s may have been disproportionate for some precincts and for some groups defined by race and gender. For now, let’s put aside these equitable distribution problems. We will return to them shortly.

Contrast the 1997–1998 hassle rates with the rates that developed in 2010–2011, at the height of NYPD’s controversial stop-and-frisk program.[138] During those two years, New York police conducted nearly 1.3 million stops.[139] The city’s population had grown to nearly 8.3 million by that time,[140] so if each stopped suspect was unique (and of course the suspects were not; some were stopped more than once), the chance that an average New Yorker would be stopped during the two-year period was roughly 15%.[141]
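The two snapshots can be compared with the same back-of-the-envelope arithmetic. The sketch below uses the rounded figures given in the text, treats every stop as a different person, and divides by the whole population. For 2010–2011 it nets out arrests using the 6% arrest rate reported in Floyd. The function name is hypothetical, and the results are only as precise as the rounded inputs.

```python
def fruitless_share(stops, hits, population):
    """Share of the population stopped without result, if every stop is a different person."""
    return (stops - hits) / population


# 1997-1998 Street Crime Unit: 45,000 stops, 9,500 arrests, about 7 million residents.
print(f"{9_500 / 45_000:.0%}")                                # 21%
print(f"{fruitless_share(45_000, 9_500, 7_000_000):.1%}")     # 0.5%

# 2010-2011 stop-and-frisk: about 1.3 million stops, 6% ending in arrest, 8.3 million residents.
stops = 1_300_000
print(f"{fruitless_share(stops, 0.06 * stops, 8_300_000):.1%}")
```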

The magnitude of the stop-and-frisk program, and the fact that the vast majority of stops were fruitless intrusions on the innocent, convinced Judge Scheindlin to find the program unconstitutional in Floyd v. City of New York.[142] The program had several Fourth and Fourteenth Amendment infirmities. The absence of sufficient suspicion was one of them. (Only 6% of the stops resulted in an arrest, a rate that was too low even under the more permissive Terry standard.[143]) But one of the most serious constitutional flaws, and the first detail mentioned in Judge Scheindlin’s decision, was the astronomical number of stops conducted under the program. The great amount of hassle that the searches created was as concerning to Judge Scheindlin as the low level of suspicion supporting them.

C. Individualization Reduces Hassle

The last Section explained why courts should monitor hassle as a Fourth Amendment interest separate from suspicion. This Section demonstrates that they already do. Although courts and scholars rarely make explicit reference to the concept of hassle, the practices that pass the mysterious mandate for individualization nonetheless have the effect of reducing hassle rates.[144] They do so by using natural limits on how many individuals a law-enforcement agency can investigate at one time. Informant and witness information is difficult to investigate. Suspicious bulges usually go unnoticed unless a police officer happens to be nearby. And police dogs are not so numerous that they can be used everywhere at once. A police unit cannot practicably expand these old practices to investigate large swaths of the population, and these resource limitations keep the hassle rates down. Although the connection to hassle is subconscious, it is not coincidental.

In the course of considering whether the government had sufficient suspicion to stop or search a suspect, courts often incorporate an analysis of hassle. Consider the case Reid v. Georgia,[145] in which the Supreme Court decided that a Drug Enforcement Administration (“DEA”) officer could not stop a drug-courier suspect in an airport based on the facts that the suspect (1) arrived from Fort Lauderdale, a city known to be a source for cocaine distribution; (2) arrived early in the morning; (3) appeared to be avoiding the perception that he was traveling with his companion; and (4) arrived with no luggage other than a shoulder bag.[146] The Court concluded that this combination of factors could not support reasonable suspicion because the circumstances “describe a very large category of presumably innocent travelers, who would be subject to virtually random seizures were the Court to conclude that as little foundation as there was in this case could justify a seizure.”[147]

This analysis touches on both hit and hassle rates. The conclusion that the combined factors would amount to “virtually random” selection shows that the Court questioned whether this profile had a better-than-random hit rate. This inquiry goes to the suspicion requirement. Reasonable minds could differ on whether the Court got this right—the third and fourth factors may increase a hit rate more than the Court cared to acknowledge. But the Court was quite clearly animated by hassle as well. The justices were concerned that the profile failed to exclude enough “presumably innocent travelers” who could have been swept up by the profile that the DEA agent employed.[148] This concern would persist even if the profile did have a decent enough hit rate to satisfy the suspicion requirements.

Reid is not unusual. Courts frequently blend and blur the Fourth Amendment interests in suspicion and hassle, treating them as a single goal. One court explained the reasonable suspicion requirement by rhetorically asking, “[I]s it not better to frustrate the prosecution of an individual who may be guilty so that innocent citizens need not be fearful of a police stop and frisk under the circumstances here?”[149] And the Fourth Circuit Court of Appeals at one point had worked its way precisely to the notion of hassle rates. Describing what is required for using drug-courier profiles, the court said that “[t]he articulated factors together must serve to eliminate a substantial portion of innocent travelers before the requirement of reasonable suspicion will be satisfied.”[150] The Fourth Circuit did not recognize the radical nature of its approach. It was breaking the tradition of focusing on the defendant’s facts and instead looking at an investigation’s effects on everybody else.[151]

Jurists and scholars have not needed to disaggregate the suspicion and hassle issues because, until recently, most programs with decent hit rates were labor intensive and therefore had low hassle rates. But as policing tools become more sophisticated, enabling law enforcement to use computing power to sift through large amounts of digital information, these concepts will begin to diverge.

The separate and independent importance of hassle rates is on spectacular display in the context of the NSA’s surveillance programs. In the wake of the Edward Snowden leaks, the White House unsealed a Foreign Intelligence Surveillance Court opinion from a 2011 case decided by Judge Bates.[152] This opinion is the first decision known to have invalidated aspects of the PRISM/Upstream program on constitutional as well as statutory grounds. The reasons were compatible with hassle.

Judge Bates wrote the opinion in response to new information about the scale of the NSA’s Upstream program, under which the federal government directly collects information on Internet transactions (as opposed to obtaining transaction information indirectly from third parties, as the government does under the PRISM program).[153] The NSA was required under the Foreign Intelligence Surveillance Act of 1978 (“FISA”) to use responsible means to “target” its information gathering at foreign communications and to minimize the collection or retention of any purely domestic communications unintentionally obtained in the process.[154]

When the FISA court learned that the NSA was not able to eliminate the chance of collecting purely domestic communications, the court undertook its own study of a random sample of collected conversations in order to assess independently the NSA’s filtering error. The court discovered that the filter’s error rate was very low—only 0.197% of the collected communications were purely domestic. But because the NSA collected so many conversations (nearly 12 million in a six-month period), the error was great in absolute terms. The court inferred from its independent assessment that the NSA collected between 48,000 and 56,000 purely domestic communications each year.[155]

The government predictably argued that the FISA court should be satisfied with the filter’s hit rate since well over 99% of the collected communications involved a foreign party. The court responded, “That is true enough, given the enormous volume of Internet transactions acquired by NSA through its upstream collection . . . . But the number is small only in that relative sense.”[156] Because the government overcollected domestic communications in an absolute sense and because it failed to implement reasonable minimization procedures, the FISA court held that the Upstream program violated the Fourth Amendment.[157]

Although the national-security context removes this case from the street-level Fourth Amendment setting, the reasoning in the case foreshadows future litigation over law-enforcement technology. The mechanical nature of the NSA’s filter did not put off the court. But the filter’s hit rate proved insufficient on its own to justify the government’s action. That a large number of individuals’ conversations were accidentally swept into the search was also an important factor. This measured the hassle of Upstream.[158] And it was enough, despite the very high hit rate, to raise constitutional concerns.

Courts have revealed a need and desire to consider hassle rates as a variable distinct from hit rates (which are already promoted by the Fourth Amendment’s suspicion requirement). Since existing determinations of individualization correspond closely with practices that reduce the hassle rate, all that remains is tying the strands together. Individualization attempts to reduce hassle.

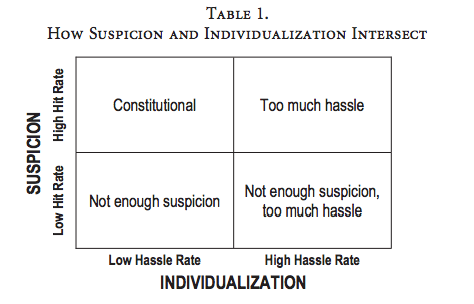

When a court considers the constitutionality of law enforcement’s individualized suspicion, it should ensure that the government’s investigative technique has a high enough hit rate to meet the suspicion standard and a low enough hassle rate to meet the individualization standard. Depending on its facts, a program would fall in one of the four quadrants in the following table:

The table can come to life with a few illustrations. NYPD’s stop-and-frisk program was unconstitutional because it operated without sufficient suspicion and with too much hassle. It would fall in the lower right quadrant.

An anonymous and uncorroborated tip, by contrast, would fall in the lower left quadrant. It would not cause a lot of hassle, but it fails the suspicion element since case law requires some corroboration or another objective reason to credit a tip’s accuracy.[159]

The upper left quadrant marks the constitutional ideal. These police investigations operate with enough suspicion to instill trust in the program and enough constraint to promise a low impact on the liberty of the average person. Corroborated tips and prudent use of Terry stops belong here.

The last quadrant, the upper right, will gain importance as some policing practices become automated. Big-data analytics may be able to meet the suspicion requirements, but if they operate on a large scale, they can quickly increase the number of fruitless searches and seizures.[160] Without constraints, data analytics can violate hassle thresholds even if their hit rates satisfy the suspicion element.
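The two-part test behind the four quadrants can be written out as a short sketch. It is only an illustration: the function name `quadrant` and both threshold parameters are assumptions introduced here, and the case law supplies no numeric cutoff for either hit rates or hassle rates.

```python
def quadrant(hit_rate, hassle_rate, min_hit_rate, max_hassle_rate):
    """Place a program in one of the four quadrants described above.

    All arguments are fractions between 0 and 1. Both thresholds are
    hypothetical inputs; courts have not fixed numeric values for them.
    """
    # Vertical axis: does the hit rate satisfy the suspicion standard?
    meets_suspicion = hit_rate >= min_hit_rate
    # Horizontal axis: is hassle low enough to satisfy individualization?
    meets_individualization = hassle_rate <= max_hassle_rate

    vertical = "upper" if meets_suspicion else "lower"
    horizontal = "left" if meets_individualization else "right"
    return f"{vertical} {horizontal}"


# Made-up numbers: an accurate program that operates at very large scale.
print(quadrant(hit_rate=0.9, hassle_rate=0.2,
               min_hit_rate=0.3, max_hassle_rate=0.01))
```

The point of keeping the two comparisons separate is that a program can pass the first and still fail the second, which is the situation the upper right quadrant describes.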

For example, if a pattern-based data-mining program detects copyright infringement with a 90% hit rate, the suspicion requirement would be satisfied. But its use may nevertheless fail the individualization requirement. Copyright law is violated so frequently that the relatively rare misfire could result in hundreds of thousands of futile investigations.[161] If the analysis of individualization tunes into hassle, courts will be well equipped to identify constitutional problems with large-scale data-analytics programs without gutting the innovations entirely. The implications of hassle-driven individualized suspicion are explored in Part III.
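The arithmetic behind the copyright example can be made concrete with a brief sketch. The 90% hit rate comes from the example above; the number of flagged individuals (three million) is purely an assumption chosen for illustration, as is the function name.

```python
def futile_investigations(flagged, hit_rate_percent):
    """Count investigations that turn up nothing, given a whole-percent hit rate."""
    # Integer arithmetic avoids floating-point rounding in the illustration.
    return flagged * (100 - hit_rate_percent) // 100


# Hypothetical scale: an automated program that flags 3,000,000 people.
large_program = futile_investigations(flagged=3_000_000, hit_rate_percent=90)

# The same hit rate applied to a method that cannot scale (50 stops).
small_program = futile_investigations(flagged=50, hit_rate_percent=90)

print(large_program)  # 300000
print(small_program)  # 5
```

Both programs are equally accurate, yet one burdens five innocent people and the other burdens three hundred thousand. The hit rate alone cannot distinguish them.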

Before we turn to implications, however, we will first explore how hassle explains some otherwise inexplicable patterns in Fourth Amendment case law. Even though courts have not referred often to hassle-style problems, there is ample evidence that they have been striving to reduce hassle all along. The indirect influence of hassle is considered next.

D. Other Instincts Explained

Courts sense the need to keep track of hassle, although they have not made a direct connection between hassle and individualization. This Section shows that linking individualization with hassle goes a long way toward explaining some otherwise curious jurisprudence. Indeed, the concept of hassle is legitimated by its subconscious application in the case law. First, hassle explains why courts sometimes react unfavorably to searches based on odd, but technically legal, behavior, while at other times they do not. Second, hassle explains why courts prefer profiles with many factors. Third, hassle offers another reason courts are unwilling to define probable cause and reasonable suspicion using precise probabilities. And finally, hassle establishes a concrete Fourth Amendment interest through which courts can demand distributional justice. Empirical analyses of racial bias in law enforcement have already exposed the disparate rate of stops and searches across races. Hassle gives these findings a home in Fourth Amendment doctrine.

1. Odd but Legal Behavior

In People v. Parker, a police officer suspected that a student who walked into a public school building, looked at the metal detector, and turned around to leave the building had a weapon.[162] The Illinois Appellate Court ruled that the officer lacked particularized suspicion because the student “could have just turned around and gone home for any number of reasons, being sick, forgot something, forgot his lunch, forgot his books, forgot his homework or what have you.”[163] But just as important as what the Parker court said is what it did not say. The court did not suggest that the police officer’s stop and search were unlikely to produce a gun. It didn’t even say that these innocent explanations were more likely than the guilty explanations to justify the student’s behaviors.[164] For its part, the Pennsylvania Supreme Court used similar reasoning when it decided that a hand-to-hand trade of money for a “small object” occurring late at night in a tough part of North Philadelphia could not support probable cause.[165]

These cases seem to conflict with Supreme Court precedents like Illinois v. Gates[166] and Illinois v. Wardlow,[167] both of which involved odd but legal behavior. Gates involved odd travel behavior that corroborated an anonymous tip.[168] And in Wardlow, a young man’s flight in reaction to the arrival of police was enough to justify an individualized suspicion even though some members of the Court acknowledged that there are innocent explanations for flight.[169]

What explains courts’ willingness to accept innocent but odd behaviors as a basis for suspicion in some cases but reject them in others? It cannot be the mere possibility of innocent explanations. Those existed for both sets of cases.

Hassle can explain these cases’ seemingly schizophrenic outcomes. When police must rely on a tip or when they must be physically present to provoke flight, the investigation methods simply cannot scale up. Physical presence is costly, and tips are lucky. Even if a significant proportion of a jurisdiction’s population would flee at the sight of policemen or engage in bizarre travel behavior, in order to take advantage of the case law, the police actually have to receive a tip or be physically present. But they cannot possibly show up to every public gathering of all youths who would decide to flee, and they will not receive tips about most of the people who engage in strange travel behavior.[170]

Hassle rates may be driving these decisions where the government does not rely on some external limiting factor like a tip or a person’s reaction to the physical presence of police. Circling a block and emerging with a bag is unusual behavior, to be sure. But we would not want everybody who circles a block and emerges with a bag of souvenirs, or who turns around at a metal detector because he forgot his homework, to become subject to a stop or search. Without something fortuitous like a tip, there is no external constraint on a police department that uses new technology to increase the number of stops or searches based on similar facts.[171]

2. A Preference for More Factors

When assessing an officer’s decision to stop or search somebody, courts prefer to receive a long list of reasons justifying the decision. The more reasons the agent can recount, the better.[172] This preference, too, can be explained by the invisible influence of hassle.

Consider drug-courier profiles. The Supreme Court condoned a DEA agent’s decision to stop a man named Andrew Sokolow in the Honolulu airport as he returned from a trip to Miami.[173] The agent suspected that Sokolow was carrying drugs because he (1) paid $2,100 for airplane tickets in cash; (2) traveled under a name that did not match the records for the telephone number he had provided; (3) came from Miami, “a source city for illicit drugs”; (4) stayed in Miami only forty-eight hours, even though he spent over twenty hours in an airplane to get between Miami and Honolulu; (5) appeared nervous; and (6) checked no luggage.[174] The Court recognized that each one of these observations could have an innocent explanation but noted that, when taken together, these noncriminal acts could contribute to a “degree of suspicion” that surpasses the reasonable suspicion standard.[175]

The Court’s reasoning is straightforward enough. The chance that a person flying out of Miami has narcotics on him is not very high. And the chance that a person flying with no checked luggage has narcotics on him is also not very high. But the odds increase when the person flies out of Miami and has no luggage. As more and more factors are added to the Venn diagram, the hit rate may continue to increase, and at some point the probability in the intersection will surpass the reasonable suspicion threshold.

This all seems perfectly reasonable, but given the low bar for reasonable suspicion, it is not clear that the DEA agent needed more than one or two of the factors to meet the threshold. The fact that Sokolow flew twenty hours and stayed in Miami for only two days may have on its own increased the chance of his carrying drugs to a figure that clears the suspicion threshold. And if that fact alone didn’t do it, perhaps because business travelers often fly long distances for short stays, adding just one more fact—that he paid for his ticket in cash—would have helped confirm that Sokolow was not a business traveler.[176] These two factors would have easily cleared the hit-rate hurdle, leaving us to wonder what work the others are doing.[177]

Hassle helps explain why courts would prefer to see more factors than are necessary in order to clear the suspicion hurdle, even if the marginal returns on accuracy are negligible. Adding factors to the Venn diagram has an exclusionary effect. Each factor has the potential to exclude a swath of the population from the possibility of a search or seizure. Courts are reassured by longer lists of justifications because these lists roughly signal that the agent’s model cannot scale to a large number of people, many of whom may be innocent.
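The exclusionary effect of each added factor can be seen in a toy sketch. The eight travelers and the factor labels below are invented for illustration and are not drawn from Sokolow or any real data set; the sketch shows only how the pool of people exposed to a stop shrinks as the profile grows.

```python
# Each hypothetical traveler is represented by the set of factors they exhibit.
travelers = [
    {"from_source_city", "no_checked_luggage", "paid_cash", "short_stay"},
    {"from_source_city", "no_checked_luggage"},
    {"from_source_city"},
    {"from_source_city", "paid_cash"},
    {"no_checked_luggage"},
    {"from_source_city", "no_checked_luggage", "short_stay"},
    set(),
    {"from_source_city", "short_stay"},
]

profile = ["from_source_city", "no_checked_luggage", "short_stay", "paid_cash"]


def pool_sizes(travelers, profile):
    """Return how many travelers match the first 1, 2, ... n profile factors."""
    sizes = []
    for n in range(1, len(profile) + 1):
        required = set(profile[:n])
        # A traveler stays in the pool only if they exhibit every required factor.
        sizes.append(sum(1 for t in travelers if required <= t))
    return sizes


print(pool_sizes(travelers, profile))  # [6, 3, 2, 1]
```

Whether or not the later factors add much to accuracy, each one removes people from the set who could be stopped under the profile.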

At their best, long lists of factors improve hit rates and decrease hassle rates at the same time.[178] As each factor contributes to the program’s accuracy, it also constrains the program’s scope. But some of the most frequently used suspicion factors appear to increase accuracy and constrain scope without actually doing so.[179]

3. Undefined Suspicion Standards

Hassle can also explain why the Supreme Court has avoided giving mathematical precision to the probable cause and reasonable suspicion standards. The Court has declared that “[t]he probable-cause standard is incapable of precise definition or quantification into percentages because it deals with probabilities and depends on the totality of the circumstances.”[180] This particular explanation is immensely unsatisfying. The Court has attracted a lot of criticism for refusing to give a probability threshold for the probable cause and reasonable suspicion standards.[181]

But some scholars have come to the Supreme Court’s defense. Lerner argues that the standards do and should have differing tolerances based on the heinousness of the investigated crime.[182] And Kerr argues that the probable cause standard must have some flexibility to accommodate factors relating to an investigation—factors that fall outside the four corners of a warrant application but that the judge is likely to know and use.[183]

Hassle offers another reason to avoid fixing the probability thresholds for probable cause and reasonable suspicion. When the police investigate a crime with a low base rate, the suspicion rate can be lower than usual without significantly changing the hassle rates. For example, if police are investigating bribery, the crime is so infrequently committed that a few additional searches per bribe spread over an entire city’s population will not significantly affect the odds that a person will be searched.[184]

Hassle may also explain why courts might prove more lenient with suspicion requirements in crime-out investigations.[185] When police are pursuing suspects of a single crime, searching four or five suspects will not affect a town’s hassle rate even though the hit rate may fall below the usual 33 1/3% standard.[186]
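A worked sketch shows how the two rates come apart in a crime-out investigation. It assumes, for illustration only, that exactly one of five searched suspects is guilty and that the town has 50,000 residents; neither figure comes from the case law.

```python
from fractions import Fraction

# Hypothetical crime-out investigation.
suspects_searched = 5
guilty_among_them = 1       # assumption: the true culprit is among the suspects
town_population = 50_000    # assumption chosen for illustration

# Hit rate: share of searches that find the culprit.
hit_rate = Fraction(guilty_among_them, suspects_searched)

# Hassle: innocent people searched, expressed per 100,000 residents.
innocents_searched = suspects_searched - guilty_among_them
hassle_per_100k = Fraction(innocents_searched * 100_000, town_population)

print(hit_rate)                   # 1/5
print(hit_rate < Fraction(1, 3))  # True: below a one-in-three benchmark
print(hassle_per_100k)            # 8
```

On these assumptions the hit rate is only 20%, yet a resident's chance of being searched while innocent remains tiny.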

4. The Search for Distributional Justice

Finally, hit and hassle rates are attractive to courts and civil rights lawyers searching for a measure of law-enforcement bias. Large investigation programs cause a lot of hassle even when their hit rates are high (and especially if they aren’t). If the hassle disproportionately affects a suspect class, this could be useful evidence of bias.

Racial disparities in hit rates can be a telltale sign of police bias: if searches conducted on cars driven by minorities result in the recovery of contraband less often than searches conducted on cars driven by whites, the disparity suggests that the department might suffer from explicit or implicit bias that leads officers to believe that minorities are more likely to be engaged in criminal conduct than they actually are.[187] But the comprehensive record of stops can also reveal disparate hassle rates, even if hit rates are the same across all races. Sure enough, hassle rates (stops or searches per one hundred thousand or sometimes per ten thousand inhabitants) are routinely used in disparate impact studies of police programs.[188]
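The difference between the two measures can be illustrated with a short sketch. The group labels, populations, stop counts, and hit counts below are all invented; they are chosen so that hit rates are identical across groups while stops per one hundred thousand inhabitants are not.

```python
from fractions import Fraction

# Hypothetical stop records for two groups of equal population.
records = {
    "group_a": {"population": 200_000, "stops": 1_000, "hits": 100},
    "group_b": {"population": 200_000, "stops": 4_000, "hits": 400},
}


def hit_rate(record):
    """Share of stops that recover contraband."""
    return Fraction(record["hits"], record["stops"])


def stops_per_100k(record):
    """Stops per one hundred thousand inhabitants, the measure described above."""
    return record["stops"] * 100_000 // record["population"]


for name, record in records.items():
    print(name, hit_rate(record), stops_per_100k(record))
# group_a 1/10 500
# group_b 1/10 2000
```

A hit-rate comparison alone would report no disparity here, while the per-capita stop rate shows one group stopped four times as often as the other.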

For the last ten years, New York City’s police department has operated under record-keeping obligations,[189] and the resulting data were critical to the constitutional challenge to NYPD’s stop-and-frisk practices.[190] Judge Scheindlin was disturbed by the disparity between the rates at which black and Hispanic New Yorkers were stopped by NYPD and the rates at which whites were stopped.[191]

The government’s expert suggested that the demographics of New York City’s criminals would be unlikely to mirror the demographics of the city’s entire population.[192] If NYPD’s stop-and-frisk program operated on a smaller scale—or operated for the purpose of rooting out a narrowly defined criminal act—the government’s objections to Judge Scheindlin’s reasoning would have some weight. The demographics of suspects selected from nuanced profiles with a good hit rate are often likely to diverge from the general demographics. For example, algorithms designed to detect white-collar crime are more likely to direct investigations disproportionately on educated white men.[193] Likewise, if NYPD’s stop-and-frisk program succeeded in detecting crime, it would be appropriate for courts to permit some divergence between the demographics of the stopped and those of the whole population.[194] Establishing an appropriate baseline would have been difficult without good data. Judge Scheindlin was able to avoid the difficult task of establishing such a baseline by pointing to the program’s ineffectiveness. Because the vast majority of stops (94%) did not lead to arrest, bona fide predictors of crime could not explain the disparity in stops.[195] NYPD’s stop-and-frisk program had such a low hit rate, and was so active, that the enterprise consisted almost entirely of hassle. And that hassle had an outsize effect on minority communities.[196]